
By Emma Meyers

A few weekends ago, I found myself sitting in a darkened theater in Chelsea at the Imagine Science Film Festival, watching a short film called Anosmia. It was about – you guessed it – anosmia, the lack of a sense of smell. The people interviewed could not smell for a variety of reasons: some had congenital anosmia and could not conceive of a world with smells, or even of what a smell was. Others described, in heart-wrenchingly honest detail, the accidents that damaged their brains and rendered their worlds scentless. With Thanksgiving on my mind, I was eagerly anticipating the mouthwatering aromas of sweet potatoes and pumpkin pie that would welcome me home and envelop me in a cozy, blissed-out holiday daze for four days, so, not surprisingly, I was horrified by the thought of never being able to smell those smells again. Or, I suppose, it was more that the thought of losing any of my senses made me uneasy. Uneasy not in a “How will I cross the street if I’m blind?!?” practical sort of way, but in a deep (don’t laugh) existential, “What do I have if I don’t have my senses?” way. If I can’t perceive something – be it a sight, a smell, a sound, a touch – it effectively doesn’t exist for me, so losing one of my fundamental ways of understanding things – of understanding how things exist on a very basic level – felt like it would mean losing part of my world and, weirdly, part of myself. If my plight sounds familiar, that’s probably because you’ve heard it before. Descartes was getting at the same thing, though much more eloquently, in his struggle to make sense of his perceptions, his own consciousness, and, ultimately, his existence. His “I think, therefore I am” is a hotly debated philosophical axiom and a compelling hypothesis for the daring neuroscientists brave enough to swim in the murky waters of consciousness research.
It’s the premise my musings boil down to – namely, that we are made up of our thoughts and perceptions, and that consciousness as we know it is contingent on our being capable of continuously sustaining these thoughts and perceptions; we think, therefore we are. If Descartes and I are on the same page here, then science has our backs, sort of. In doing some research (that is, intense googling) in hopes of validating or assuaging my worst anosmic fears, I came across a little area of the brain called the claustrum: a thin, curvy sheet of gray matter tucked away beneath the cortex, one on each side. Interestingly, this waif of a structure forms connections with innumerable cortical loops, creating cross-talk with most of the cortex. All sorts of information converges here – auditory, visual, motor – and its role as an integration center has led scientists Francis Crick (of DNA double-helix fame) and Christof Koch to propose that the claustrum is the “conductor coordinating a group of players in the orchestra, the various cortical regions” – essentially, what allows the nutty aroma, hot temperature, liquid consistency, brown color, and bitter taste perceived while drinking coffee to be interpreted as a whole, the experience of drinking coffee, rather than as a constellation of separate sensations. Anatomically and physiologically, the claustrum is a promising candidate for the seat of consciousness, one that careful future research may single out. Is the claustrum the “Cartesian theater” in which our only view of the world is played out for us? Maybe. Maybe not. Honestly, we don’t really know, and science is still in the early stages of figuring out how to experimentally manipulate the claustrum so it can be studied.
The idea has been proposed before, though, and disputed by scientists and philosophers alike (particularly vocal among them is the philosopher Daniel Dennett, who rejects the idea of a single place in the brain where consciousness “comes together” but, unfortunately, whose writings I’ve had a difficult time actually getting my hands on). What if the claustrum is what gives rise to the experience of consciousness? What if this thin, oscillating network is essentially what defines our world, our existence, and the existence of things around us? What are the implications? I, for one, have mixed feelings. On one hand, as a student of neuroscience who is daily astounded by the complexities of the brain, I think discovering the seat of consciousness would be an unprecedented victory for science, not only in uncovering the secrets of the most mysterious facet of neuroscience, but in proving that our brain, our intellect, is capable of teasing even itself apart and can analyze and understand anything that comes its way. On the other hand, though, is my apprehension about the reductionist take. To think of everything I am as the binary output – fire, or don’t fire – of a single network is unsettling. It’s my anosmic nightmare: waking up without a sense of smell and finding that the world is actually no more than the arithmetic sum of my neuronal firing, and that, with my sensory inputs altered, my consciousness is diminished, the world is diminished, and, ultimately, my perception of myself is diminished. But who knows what the implications are, or whether there is even enough evidence to consider them? I pose these questions because I don’t – we don’t – know the answers, and, moreover, we don’t know if science will ever reveal them to us. So, I’ll leave this open-ended: what do you think?


By Ian MacArthur

Insulation plays a crucial role in our daily lives. It keeps our homes warm in winter, prevents our coffee from rapidly cooling, and protects our bodies from the cold. Yet another sort of insulation plays an indispensable part in our well-being from moment to moment: the insulation of our nervous system, provided by the layer of proteins and fats known as myelin. The myelin layer allows nervous signals to travel quickly and efficiently throughout the body. Without it, transmission slows and, in severe cases, communication between nerves breaks down. Myelin degradation is a key characteristic in the pathology of adrenoleukodystrophy, a rare sex-linked genetic disorder. Adrenoleukodystrophy, or ALD, is a member of a family of diseases known as peroxisomal disorders. These disorders are characterized by impaired function of the peroxisome, the organelle responsible for much of the cell’s fatty acid metabolism. In ALD, failure to break down very long chain fatty acids (VLCFAs) results in the destruction of the essential myelin layer. ALD is caused by a mutation in the ABCD1 gene, located on the X chromosome, which males inherit from their mothers. The gene codes for a transport protein responsible for conveying VLCFAs into peroxisomes, where they are broken down and disposed of by the cell. The mutated protein is unable to transport VLCFAs, leading to a buildup of these fatty acids in nervous tissue and the adrenal glands. As VLCFAs accumulate, the myelin degrades, slowing nervous signals, and the adrenal glands are damaged. The most severe form of ALD appears in children as early as four years of age. The first symptoms can include behavioral changes such as aggression, poor vision, and seizures, and many patients also show signs of adrenal insufficiency. As myelin degradation progresses, sensory, motor, and neurological function decline, eventually leaving the patient in a vegetative state.
Death typically occurs within five years of the onset of symptoms. Although childhood ALD is the best-known form of the disease, related illnesses have been observed in middle-aged adults. Adrenoleukodystrophy is a recessive X-linked disorder. The X and Y chromosomes are called sex chromosomes because of their role in determining the sex of a child. ALD is much more prevalent in males, who receive one X and one Y chromosome, than in females, who receive two copies of the X chromosome. This is because a female must inherit two mutated X chromosomes to develop the full disease, while a male needs to inherit only one. Although no cure for the disease has been developed, treatments are available that may delay the onset or reduce the severity of symptoms. A mixture of oleic and erucic acids known as Lorenzo’s Oil has been shown to postpone the onset of ALD in pre-symptomatic children by lowering VLCFA levels in the blood. Bone marrow transplants have also provided effective treatment for childhood ALD, and experimental techniques that couple bone marrow transplantation with gene therapy have shown promise. Despite these developments, adrenoleukodystrophy remains difficult to treat in patients already exhibiting symptoms. Nevertheless, researchers continue to make progress in the long effort against this tenacious killer.

By Alexander Bernstein

Each year, nearly 200 million people contract malaria. According to the World Health Organization (WHO), as of June 2011 the disease was the fifth leading cause of death in low-income countries, accounting for 5.2% of all deaths there, or nearly half a million people each year. Such devastation is concentrated mostly in sub-Saharan Africa: the Malaria Consortium reports that nearly 89% of the roughly 900 thousand malaria-caused deaths occur in this region. Although less poignant than the toll on human life, the financial burden of this disease is also considerable. While an estimated 1.7 billion dollars are spent each year to combat malaria, this amounts to only a third of the financial commitment that the 2009 WHO World Malaria Report suggests is required to reduce the disease’s burden by 75% by 2015. As with any ailment, it is important to understand how the disease works. What causes malaria, and why is it so devastating? The answer lies in an unlikely partnership between the female Anopheles mosquito and the Plasmodium parasite. After an incubation period of about a week in the mosquito, the parasite can be transmitted to a human host. Once inside a new host, Plasmodium (the most dangerous species of which is P. falciparum) resides and multiplies in the liver for anywhere from about a week to several months. The parasite then invades the red blood cells, and its presence becomes manifest. For the fortunate individuals with uncomplicated malaria, illness is generally limited to fever, swelling, and typical flu-like symptoms. For the less fortunate, however, severe malaria takes hold, and fatal blood abnormalities and organ failure are not uncommon. Although progress has been made against this disease, most current treatments focus on preventing the parasite from forming heme crystals by introducing competitive inhibitors.
However, such treatments are becoming less effective as the parasite continues to evolve resistance. A new approach is needed. Luckily, researchers from Imperial College London and the CNRS in France think they might have the answer. These scientists have identified what they call the “Achilles heel” of the parasite: its transcription process. They have found two separate molecules that inhibit this process. Initial ex vivo experiments have shown that these molecules attack the parasite during its longest life stage and are therefore highly effective at killing Plasmodium. Lead Imperial College London researcher Matthew Fuchter is extremely optimistic about future prospects, explaining that early results indicate the new treatment’s ability to “rapidly kill off all traces of the parasite, acting at least as fast as the best currently available antimalarial drug.” Importantly, these novel molecules also address the issue of resistance, as they appear to deal effectively with even the most resilient and rapidly adapting Plasmodium strains. Fuchter and his colleagues in France have been extremely pleased with these results and maintain that a cure for malaria will be found within the next decade.

By Nate Posey

By now Americans have had a little over two weeks to adjust their circadian rhythms to the seasonal ritual of turning back the clocks, unless they live in Arizona, that is. Yes, since the late 1960s, the Grand Canyon State has quietly declined to observe Daylight Saving Time thanks to a federal exemption. Such an anomaly represents one of the myriad obstacles to communication and commerce on a global network spanning twenty-four time zones, but while you might pause now for a few seconds to decide whether your call will be a rude awakening for your friend in Flagstaff, the minor inconveniences we now face may well pale in comparison to the difficulties of interplanetary contact. In Arthur C. Clarke’s Imperial Earth, the human race has seeped out of its terrestrial cradle to colonize the solar system, establishing permanent habitations as far out as Saturn’s moon Titan. With a rotational period of over 380 hours, Titan is scarcely amenable to the twenty-four-hour day; synchronizing the moon with Earth’s time scheme robs local time of any significance, as the sun may be rising, setting, or anything in between at noon on a given day. Fortunately for the denizens of Clarke’s novel, the reliance on artificial lighting in the subterranean cities of Titan allows them to set whatever length of “day” they please. Surprising as it may seem, we have already entered the era of interplanetary timekeeping. Thanks to the rovers Opportunity and the recently landed Curiosity, NASA scientists have been tracking the time of day at various locations on Mars for years. Knowing the local time enables mission operators to schedule regular data transfers to Earth so that the rovers can perform their missions under predictable lighting and temperature conditions. To account for the 24-hour, 39-minute Martian day, NASA scientists simply stretched the Martian hour, minute, and second so that each Martian day still divides into twenty-four familiar hours.
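The stretched Martian clock can be sketched in a few lines of Python. This is a simplified illustration, not NASA’s mission software: it assumes the commonly cited mean sol of 24 h 39 min 35.244 s, measures time from local Mars midnight, and the `mars_clock` helper and constant names are hypothetical.

```python
# Simplified sketch of a "stretched" 24-hour Mars clock.
# Assumptions: mean sol of 24 h 39 min 35.244 s; the clock starts at local
# Mars midnight. Real mission timekeeping is more involved.

EARTH_DAY_S = 24 * 60 * 60                 # 86,400 Earth seconds
SOL_S = 24 * 3600 + 39 * 60 + 35.244       # about 88,775 Earth seconds

# Each Mars "hour", "minute", and "second" is this many times longer than
# its Earth counterpart (about 1.0275).
STRETCH = SOL_S / EARTH_DAY_S


def mars_clock(earth_seconds_since_midnight: float) -> str:
    """Hypothetical helper: HH:MM:SS reading of a 24-hour Mars clock after
    the given number of Earth seconds since local Mars midnight."""
    # Convert Earth seconds to stretched Mars seconds, round to the nearest
    # whole Mars second, and wrap around at the end of each sol.
    mars_seconds = round(earth_seconds_since_midnight / STRETCH) % EARTH_DAY_S
    hours, rest = divmod(mars_seconds, 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"


if __name__ == "__main__":
    print(mars_clock(3600))       # one Earth hour after midnight: 00:58:24
    print(mars_clock(SOL_S / 2))  # half a sol: 12:00:00 (local noon)
```

Because every Mars “second” lasts about 1.0275 Earth seconds, one Earth hour after midnight reads as just under 00:59 on the Mars clock, and a full sol wraps back to 00:00:00.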
While the scheme has been accepted without complaint by the red planet’s few lonely robotic residents, variable time conversions might well prove maddening for any future inhabitants who lack a computer for a brain. Not only will Martians be forced to constantly update the discrepancies between their local clocks and those of whatever region of Earth they’re trying to contact, they will also face the hopeless prospect of correlating the months of their roughly 669-sol year with those on Earth. Thanks to the economic and political primacy of Earth, though, Greenwich Mean Time will likely remain the standard of choice for official interplanetary transactions for the foreseeable future, and it will be some time before any extraterrestrial settlements are autonomous enough to require a local calendar. Somehow I can’t imagine the first manned research station on Mars establishing all that many local holidays, although I wouldn’t say the same about world records (Guinness may want to reconsider its books’ titles).

By Emma Meyers

To everyone who hates math (read: humanities majors): remember how awful the anticipation of a math test was? The flip-flopping of your stomach, the dry lump in your throat, the almost ritualistic under-your-breath repetitions of formulas as you took your seat and watched the papers being handed out, and the frenetic dreams of solving equations the night before the test? Well, all of those trigonometry-related trips to the school nurse may have been warranted: a recent study from the University of Chicago found that the anxious anticipation of having to do math is accompanied by activation of pain pathways in the brain. Researchers used functional MRI, a technique that detects changes in blood flow in the brain in order to image changes in activity, to look at subjects’ brains while they performed challenging math and verbal reasoning tasks. The subjects were presented with the problems while in the scanners, and each problem was preceded by a color-coded cue so that they would know which type of task was coming next. When shown these cues, subjects with “high math anxiety” (essentially, those who reported feeling more anxiety during math-related activities) showed activation of brain regions strongly involved in the perception of pain, especially an area known as the posterior insula, which is implicated in the direct sensation of physical pain. The activation was less marked during the actual math task, so it seems to be the anticipation, not the math itself, that hurts. The authors, Lyons and Beilock, point out that their findings suggest we might have to start changing how we think about why things are painful. Generally, scientists view pain, whether physical or emotional, as evolutionarily advantageous.
Feeling physical pain when we touch something hot lets us know it is dangerous, and feeling emotionally hurt after social rejection motivates humans to try to get along with groups, which offer protection and improve the odds of survival. Math, however, is not only relatively novel in human history, but also a culturally acquired activity that is basically evolutionarily irrelevant. The neural response seen here suggests that it might be necessary to look beyond evolution to understand why we perceive things as painful. Still, no paper is perfect, and brain imaging studies like this often leave us with as many questions as they answer. For one, the authors acknowledge the possibility that the areas activated by the math cue are not generating the sensation of physical pain, but are instead responding to the visceral discomfort of psychological anxiety about math. Even if this were the case, they argue, their findings point to a physical mechanism underlying math-anxious individuals’ aversion to math. So, math haters everywhere, this is your chance to get out of your next test with a doctor’s note: the exam literally hurts.

When you live in a city like New York, it’s astonishing how quickly your definition of “stars” changes from “brilliant multitude of silvery flecks spattered across the inky dark night sky” to “the windows at the top of skyscrapers that look really tiny and far away, and airplane lights.”

For those of us who can no longer imagine what stars look like, tonight’s Leonid meteor shower should be a good refresher. The Leonids, named after the constellation Leo, from which the meteors appear to radiate, are associated with the comet Tempel-Tuttle. As Earth, reeling through space at high speed, passes through a cloud of debris from the comet, viewers on the ground are graced with the sight of shooting stars streaking across the sky, and in some years thousands of them. In years when the Leonids are particularly strong, viewers have reported having to brace themselves as they watched, so strong was the feeling of Earth speeding incomprehensibly fast through the darkness. So, if you’re still foggy and would like a crash course in these “star” things I keep talking about, or just want to get a glimpse of the Leonids over our neon city, join CSR and the Columbia astronomy department tonight, Friday, November 16, from 6 to 8 pm on the 14th floor of Pupin for food, fun, and stargazing. Astronomy department head Joseph Patterson and faculty member Helena Uthas will be there to help us understand the meteor shower and to let us view it through the observatory’s telescope (!!).

By Ian MacArthur

Each day, the human body’s wondrous capacity for regulation enables us to carry out our necessary tasks in the midst of an ever-changing environment. Our ability to endure climates both scorching and frigid testifies to the body’s great control over its internal state, a quality termed homeostasis. Cellular processes are adapted to maintain the homeostatic balance the body needs to function across a vast range of environments. Although external conditions may vary a great deal without causing a human being much harm, even a slight change in the body’s internal state can be catastrophic. Such is the case with the body’s regulation of copper, a micronutrient that is needed only in small amounts and can do great internal damage in excess. While the body’s copper balance is normally maintained through the concerted effort of a variety of proteins, patients with Wilson’s Disease are denied this crucial regulation. First described by Samuel Wilson in 1912, Wilson’s Disease is a genetic disorder characterized by the buildup of copper in the body’s tissues, primarily the liver and brain. The ultimate consequences of this excess include liver failure, dementia, schizophrenia, and other grave ailments. Mutations in the ATP7B gene, located on chromosome 13, are the underlying cause of this defective copper metabolism. The ATP7B protein that the gene encodes contains six copper-binding sites, making it a key player in the elimination of copper from liver tissue. In patients with Wilson’s Disease, the defective protein is unable to transfer cellular copper to the Golgi apparatus, where it is prepared for excretion from the cell. As a result, the liver cannot eliminate copper, and the metal builds up in its cells. Patients may suffer from a variety of neurological, psychiatric, motor, and other symptoms. Dementia, insomnia, and seizures may result, as well as depression and schizophrenia. Muscle spasms can occur, and patients may have difficulty walking.
One of the most distinctive signs of Wilson’s is a brown ring around the iris, called a Kayser-Fleischer ring, which results from copper deposition in the eye. The most serious symptoms of Wilson’s are hepatic in nature and include cirrhosis of the liver, which can lead to fatal failure of the organ. Wilson’s Disease is an exceedingly rare illness, with a global prevalence of only about 30 cases per million people. To develop the disease, an individual must inherit two mutated copies of the ATP7B gene, one from each parent, making Wilson’s a recessively inherited genetic disorder. A carrier with only one defective copy of the gene will not develop the disorder but has a 50% chance of passing it on to each child; the child must also receive a second defective copy from the other parent in order to develop symptoms. Although patients with Wilson’s are unable to regulate their copper levels biologically, the illness can be effectively treated with medication that regulates copper. Drugs such as penicillamine and trientine bind to copper through a process termed chelation and help eliminate it through the urine. If taken throughout a patient’s life, these drugs can keep disease symptoms under control. Such is the wonder of human homeostatic potential that when our bodies fail to cope with a problem, our minds devise an effective solution.

By Alexander Bernstein

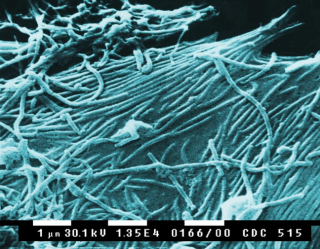

If there is one disease you don’t want to get, it is Ebola. Known in the scientific community as Ebola hemorrhagic fever (or Ebola virus disease), this viral infection takes its name from the Ebola River in the Democratic Republic of the Congo, where it was first documented in 1976. The virus itself is a member of the RNA virus family Filoviridae. While five subtypes of the virus have been documented, only four have been found to infect humans: Ebola-Zaire, Ebola-Sudan, Ebola-Ivory Coast, and Ebola-Bundibugyo. A nightmare for epidemiologists and medical professionals, the virus has among the highest case fatality rates of any human disease, with infected individuals often dying of massive internal bleeding. Certain outbreaks, such as those in 1976 and 2003, have seen mortality rates reach up to 90%. Further problems arise from the difficulty of diagnosing the disease, as early stages of the infection present very generic symptoms such as weakness, sore throat, and a rash. Because the fatality rate is so high, because no carrier state exists, and because human-to-human transmission is thought to occur only through contact with bodily fluids, the 1977 International Colloquium on Ebola Virus Infection deemed the disease to have a relatively low potential for widespread epidemics. Regrettably, that may no longer be the case. As of October 26th, 2012, the World Health Organization (WHO) had reported 52 cases of Ebola, 35 of them laboratory confirmed, in the Democratic Republic of the Congo.
NPR reports that the death toll stands at 31, with WHO spokesman Tarik Jasarevic noting that this number will likely rise in the near future: “We can expect an increase in the number of cases as more people are tracked.” He continued, “I want to stress that this is a serious outbreak, and there is a risk of the Ebola virus spreading.” Another WHO official, stationed in the capital, Kinshasa, confided that the outbreak may very well be “out of control,” warning that “if nothing is done now, the disease will reach other places and even major towns will be threatened.” The Centers for Disease Control and Prevention notes that there is no known cure or vaccine for the disease, but the strain behind the current outbreak has been identified as Ebola-Bundibugyo, which had a 34% mortality rate in an earlier outbreak. Much caution must still be taken, however, as multiple cases have been confirmed dangerously close to the city of Isiro, which has a population of over 150,000. If the virus broke out into a full-fledged epidemic in this region, the consequences would be catastrophic. Indeed, if the virus enters the city’s hospital system, typically the greatest danger in an Ebola outbreak, many more than the 200 people who perished in the 1976 epidemic (the worst confirmed Ebola epidemic to date) could lose their lives.

By Nate Posey

According to the International Energy Statistics database, the world consumed a whopping 505 quadrillion Btu of energy in 2008, and global demand is still climbing swiftly. Policy experts have proposed numerous solutions to the impending generational crisis, including increased drilling for oil and natural gas, the steady expansion of wind and solar, and the development of smarter grids for greater efficiency, but those ideas are all pretty boring. In serious times such as these, we must be willing to look to the truly innovative solutions: the fictional ones. Since the 1950s, nuclear fusion has been the gold standard of fictional energy generation. It was to fusion that the Wachowskis turned in an attempt to gloss over the incomprehensible plot point of machines using human bodies as an energy source in The Matrix, and fusion that drove Otto Octavius to trade Spider-Man for Harry Osborn’s bizarre, baseball-sized chunk of tritium in Raimi’s Spider-Man 2. With a nearly inexhaustible fuel supply and virtually none of the environmental risks of nuclear fission, fusion is the veritable Holy Grail of renewable energy. Ever since the development of the hydrogen bomb, the tantalizing possibility of cheap, limitless energy has loomed just around the bend. Thanks to recent developments in computing and high-energy plasma physics, this possibility may be, er, well, even closer around the bend. The International Thermonuclear Experimental Reactor (ITER), the world’s largest tokamak reactor and the result of a tremendous multinational collaboration, holds promise as the first fusion experiment designed to produce a net gain in fusion power, generating more power than is used to heat its plasma. The reactor uses massive superconducting coils to generate a magnetic field capable of confining its 150-million-degree-Celsius deuterium-tritium plasma. Slated to initiate its first plasma burn in 2020, ITER is currently under construction in Cadarache, France.
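For reference, the deuterium-tritium reaction that such a plasma is meant to sustain can be written in standard nuclear notation, with the textbook energy split between the products (about 17.6 MeV released per reaction):

$$
{}^{2}_{1}\mathrm{H} + {}^{3}_{1}\mathrm{H} \longrightarrow {}^{4}_{2}\mathrm{He}\ (3.5\ \mathrm{MeV}) + {}^{1}_{0}n\ (14.1\ \mathrm{MeV})
$$

The electrically charged helium nucleus stays trapped in the magnetic field and helps keep the plasma hot, while the uncharged neutron escapes the field and carries most of the energy out to the reactor walls.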
Even if the ITER initiative succeeds, however, the road to full-scale production of commercially viable fusion energy may be a long one indeed; endless, perhaps. In Isaac Asimov’s Robot series, the human race largely bypasses nuclear fusion in favor of orbiting solar relay stations, massive platforms that capture direct solar radiation (without atmospheric interference) and transmit the energy Earth-side in highly concentrated beams. In Asimov’s Prelude to Foundation, the galactic capital of the distant future meets the bulk of its energy needs through a sophisticated system of deep-earth heat sinks. While neither of these methods seems a likely successor to fossil fuels in the next century, the nature of political, economic, and ecological forces makes long-term predictions of our energy dependence nearly impossible. Fusion technology may well be the dream of today, but it might pale in comparison to the reality of tomorrow. So who knows? I personally can’t wait to start paying utility bills to use an artificial sun, but then again, I might have said the same thing in 1998 about seeing Star Wars in 3D…

By Emma Meyers

There is a magical mirror on the third floor of Schermerhorn. It stands alone, full length, against the wall in the ladies’ room, reflecting back images that are more beautiful than real life. I swear, this mirror has the uncanny ability to make your legs look longer, your waist look smaller, and all your clothes fit you perfectly. I’m not the only person to notice this: without fail, someone is always standing before it, fixing her hair, adjusting her outfit, or just generally ogling the image staring back at her. So, this morning, as I shouldered my bookbag and watched the latest mirror gawker (and maybe tried to get a glimpse of myself around her as I passed), I wondered what it is about this mirror, and mirrors in general, that allows us to see something so different from reality when we’re staring back at ourselves. Through a variety of brain imaging studies looking at how subjects identify photographs of themselves and distinguish their own faces from those of others, a slew of brain regions have been implicated in self-recognition. In addition to general face recognition areas like the fusiform gyrus of the temporal lobe, researchers at the University of California have found that a specific network in the right inferior frontal and parietal lobes is active when subjects view their own faces. When subjects viewed photos of their own faces morphed with those of others, the more “self” they perceived in the images, the more active their frontoparietal network was. But even with our own faces mapped out in our brains, we’re not entirely foolproof when it comes to self-recognition. In fact, psychologists at the University of Chicago and the University of Virginia have shown just how vulnerable we are to self-deception. When asked to identify a picture of themselves in a lineup of images of their faces morphed to look more or less attractive, experimental subjects tended to select the attractively morphed faces as their own.
This phenomenon, known as self-enhancement, was found to be linked with implicit, but not explicit, measures of self-worth. Essentially, our brains seem to automatically and unconsciously distort what we see in the mirror, and our self-concepts may not match how others and the camera lens construe our looks. Mirrors can deceive the brain in much more complex ways than just enhancing our faces, as well. Take University of California, San Diego researcher Dr. V. S. Ramachandran’s mirror box: a contraption designed to trick the brain into believing that mere reflections of limbs are actually part of the body. The box, consisting simply of a vertical mirror that reflects one hand and blocks the other from view, was originally created to relieve pain in amputees experiencing internally generated but very real pain in their amputated (or “phantom”) limb. When the reflection of their intact hand was aligned with the perceived location of their phantom hand, patients reported feeling as if the reflection were actually their missing limb, and movements of their good hand, reflected back, were perceived as movements of the phantom. These strong physical sensations show just how powerful the visual system is and how easily what we see can override reality in the brain. So maybe it’s not the mirror after all. With all the self-enhancement and strange visual-sensory attributions our brains are capable of, maybe the too-good-to-be-true images that mirror shows us exist only in our minds, neural composites of angles and light and an unconscious bias that we’re just that good-looking. Our brains are tricking us, not the mirror itself. But then again, if our only experience of the world is what our mind shows us, is it truly trickery when we see what’s not there? Maybe the reality is that it’s all smoke and mirrors.
