
By Alex Bernstein

Solar energy is often thought of as a means of powering large machines and structures. Solar panels on homes, schools, and factories can capture enough sunlight to cover the energy needs of these buildings in an efficient and environmentally sustainable way. Now, scientists are beginning to use solar energy to power systems on the molecular level. Researchers at the University of Cincinnati have developed an organic, solar-powered nano-filter that removes toxins from water with unmatched efficiency. The need for high-performance filtration stems from the presence of unwanted antibiotics and carcinogens in rivers and lakes, which arrive there via runoff from farms and chemical plants. Antibiotics in water harm aquatic ecosystems by favoring the survival of antibiotic-resistant bacteria and by reducing populations of other microorganisms. The biodiversity of these ecosystems is thus reduced while the prevalence of antibiotic-resistant bacteria rises, posing the threat of higher disease incidence in humans. The new filter is composed of two bacterial proteins arranged in a sphere. One of the proteins, AcrB, functions as a pump that selectively takes up carcinogens and antibiotics from the water and stores them within the sphere. The other, Delta-rhodopsin, captures light energy and, by pumping protons, supplies the energy AcrB needs to sustain its pumping action. Together, the two proteins filter antibiotics out of water much more efficiently than standard filters: whereas traditional carbon filters absorb around 40% of the antibiotics in water, the nano-filters absorb up to 64%. The success of the unit may depend on the bacterial origin of its protein pump. In a normal bacterial cell, AcrB helps eliminate foreign substances, including antibiotics, by pumping them out of the cell. “Our innovation,” comments David Wendell, one of the authors of the report, “was turning the disposal system around. So, instead of pumping out, we pump the compounds into the proteovesicles.”
The ability of AcrB to expel antibiotics from bacterial cells is a product of evolution that has allowed for the emergence of highly antibiotic-resistant bacteria. The protein filter, then, is an example of a highly beneficial product derived from a nominally harmful source. Another advantage of the filter is that the antibiotics it collects can be recycled. Substances captured in carbon filters cannot be reused because the filters must be heated to hundreds of degrees to be regenerated. The new filters, on the other hand, can be treated after use to recover the collected antibiotics. Moreover, the nano-filter avoids the high energy costs of carbon filters by relying solely on solar energy. The protein filter lies at the intersection of biotechnology and alternative energy. Although the idea of a solar-powered filter with the diameter of a human hair sounds absurd, its creation testifies to the highly original and innovative technologies that can be produced from natural products. With nature as its guide, mankind can indeed achieve the improbable.
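The comparison between the two filters is easier to read as simple arithmetic. The short Python sketch below assumes the 40% and 64% figures are single-pass removal fractions measured under comparable conditions. The report's test setup is not described here, so this is an assumption. The function name `compare_removal` is hypothetical.

```python
def compare_removal(baseline: float, improved: float) -> dict[str, float]:
    """Compare two filters by the fraction (0 to 1) of antibiotic each removes.

    Assumes both fractions describe one pass of the same water sample.
    """
    remaining_baseline = 1 - baseline  # fraction left after the carbon filter
    remaining_improved = 1 - improved  # fraction left after the nano-filter
    return {
        "capture_ratio": improved / baseline,
        "remaining_baseline": remaining_baseline,
        "remaining_improved": remaining_improved,
        # How much smaller the leftover amount is, relative to the baseline
        "relative_reduction_in_remaining": 1 - remaining_improved / remaining_baseline,
    }


result = compare_removal(0.40, 0.64)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

With these inputs, the nano-filter captures 1.6 times as much antibiotic. The fraction left in the water falls from 0.60 to 0.36, which is about two-fifths less antibiotic left behind.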


By Alex Bernstein

In the United States alone, more than 100,000 people are currently waiting for a donor kidney. While dialysis machines can perform the essential functions that those with advanced kidney disease so desperately need, only a donated kidney can provide a permanent solution. Sadly, because donors are scarce, the wait proves too long for many. Now, however, thanks to groundbreaking efforts by Dr. Harold Ott and his team of researchers at Massachusetts General Hospital, the wait may soon be over. As early as 2008, Dr. Ott and his colleagues developed a method for stripping the organs of recently deceased individuals clean with a detergent solution, then rebuilding the organ with a patient’s stem cells. Dr. Ott explains that the process allows them “to preserve the blueprint of the organ” while washing it empty of the donor’s cells. The organ becomes “a mere shadow of the original tissue,” he continues. By getting rid of the old cells and replacing them with those of the receiving patient, this procedure creates an organ that is essentially already the recipient’s own, which avoids the risk of rejection because the tissue is no longer foreign. Yet, until quite recently, researchers struggled to create functioning organs because it was difficult to get the new cells into the right spots. Finally, after much experimentation, Ott and his team found the working formula: a pressure gradient that essentially sucks the new cells into their appropriate positions. The first tests of these organs were performed in vitro. The scientists placed prepared rat kidneys into chambers that simulate the body’s environment, supplied with all of the nutrients that would be found in a real mammalian system. Remarkably, after just a few days of growth, the engineered tissues began to make rudimentary urine. Ott and his team had finally succeeded in creating functional kidneys.
Excited by these results, the researchers at Massachusetts General Hospital moved on to the next step and transplanted the organs into an animal model. The successful transplantation of these organs from the bioreactor into rats was a leap forward for the entire field. “The reason why I’m most excited about this recent publication is because it shows the platform character of this technology,” explains Ott. What’s so great about this procedure is that it is by no means limited to kidneys. “The technology can be applied to any tissue, any organ, that can be perfused by its own vascular system,” the lead researcher notes. Although human trials are still quite a way down the road, this work suggests a bright future for the field of organ engineering.

By Nate Posey

“Yesterday’s Future Today”: a biweekly column exploring the various predictions of classic science fiction and how they’ve stood the test of time. Earlier this year, Lucasfilm announced its decision to scrap the slated 2013 release dates for the 3D conversions of Star Wars: Attack of the Clones and Star Wars: Revenge of the Sith. As a lifelong Star Wars fan who has devoted a ponderous amount of time and money over the years to the series’ films, books, toys, games, apparel, and collectibles, I greeted this news with a resounding “Meh.” Leaving aside the fact that these films were from the prequel trilogy (which I happened to enjoy, despite its numerous shortcomings), I simply had no interest in the tedious and migraine-inducing “technical marvel” of 3D motion pictures. I have had the misfortune of sitting through two 3D films thus far. The repeated waves of nausea I felt seem to have somewhat obscured the experiences in my memory, but the words “blurry,” “disorienting,” and “gimmicky” come to mind. The 3D movie craze may well turn out to be a red herring, but it is merely a symptom of a very real and enduring phenomenon of our technological age: the quest for greater immersion. Through surround-sound speaker systems and high-definition televisions, motion-controlled game consoles and microphone headsets, the denizens of the twenty-first century have repeatedly sought to heighten the illusion of their sporadic breaks from reality. On the extreme end, role-playing games like Blizzard’s World of Warcraft or BioWare’s Mass Effect series enable players to reincarnate themselves in rich and lush environments that are realistically shaped by the consequences of their decisions. Even non-gamers find ways to occasionally lose themselves in the drama of their favorite television series. Not surprisingly, the future of escapism litters the pages of escapist futurism. Wait, is that right? Well, whatever, we’re just going to look at some science fiction.
The concept of virtual reality appears in countless works of science fiction both new and old, but one of the most stirring portrayals of all time was in the 1999 film The Matrix by the Wachowskis. By feeding electrical impulses directly into the brain, the titular Matrix bypassed the need for helmets or bodysuits entirely, creating a virtual environment of perfect realism for its captive users. Such direct cerebral stimulation may be much closer to our world than you might think. A team led by Dr. Theodore Berger at the University of Southern California has successfully implanted a computerized hippocampus in the brain of a rat. The device is capable of generating and transmitting artificial memories to the animal. Thankfully, such technology has not yet fallen into the hands of any self-aware machine overlords! Author Philip K. Dick provided a far different glimpse of mankind’s future fix for escaping reality in his novel The Three Stigmata of Palmer Eldritch (1964). In Dick’s rendering of the early twenty-first century, Earth’s citizens are press-ganged into service as colonists for the small settlements scattered throughout the solar system. Isolated from human civilization in tiny hovels and beset on all sides by the inhospitable elements of an alien planet, the reluctant colonists turn to the psychotropic wonder-drug Can-D to briefly escape the reality of their wretched lives. Used together with a customizable miniature layout, the drug enables the colonists to inhabit a personal avatar safely ensconced in an earthly paradise for a precious thirty minutes or so. Unlike more familiar narcotics or hallucinogens, Can-D provides users with a crystal-clear experience scarcely distinguishable from reality. It also leaves them clear-headed and fully capable of enjoying their brief snatch of nirvana.
Whether the ultimate means are biological or digital, there can be little doubt in my mind that man’s quest for a virtual reality, for a precious escape from the soul-crushing drudgery of [insert day job], will continue well into the future. Oh, and this is just a personal shout-out to another science fiction connoisseur (you know who you are): Philip K. Dick is a credit to his profession, to be sure, but he couldn’t hold a candle to the Big Three (Asimov, Clarke, Heinlein). And we all know that there is only one writer who could lay any reasonable claim to being added to that list (*cough* Ray Bradbury *cough* *cough*).

By Emma Meyers

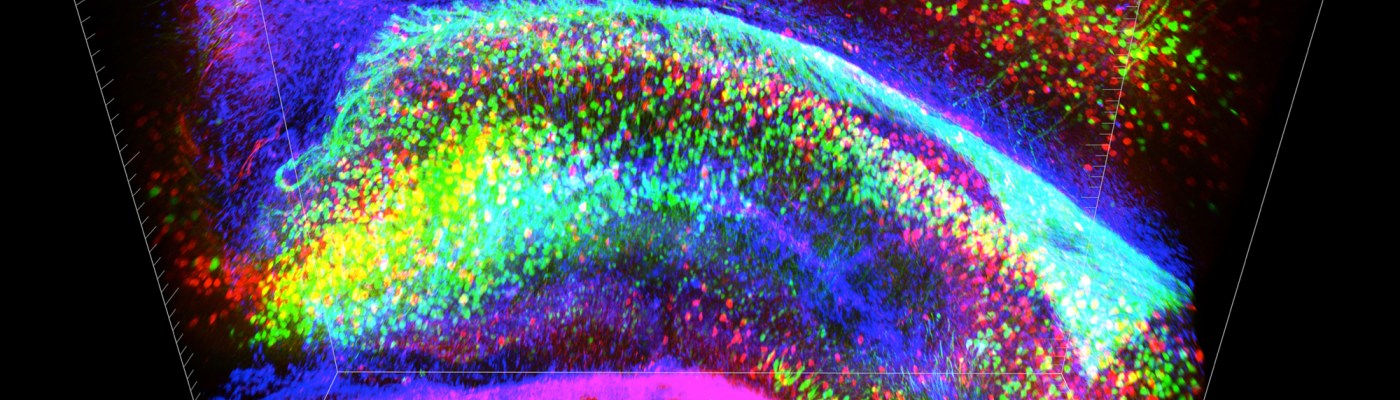

Ever since the Obama Administration announced its backing of a new $100 million initiative to map the connections of the brain’s 85 to 100 billion neurons, skeptics have questioned whether neuroscientists have the tools to achieve such a massive feat. Now, researchers at Stanford University are reporting in the journal Nature a new technology that renders a whole mouse brain transparent, with neural connections lit up by fluorescent antibodies to create a psychedelic 3D neuronal map. The process, developed by a team working under the direction of neuroscientist and psychiatrist Karl Deisseroth, is called CLARITY. It promises a new level of understanding of neural connectivity in a whole-brain context and could help researchers make great strides in uncovering the mechanisms of still-mysterious mental disorders like schizophrenia and autism. Normally, brain tissue is opaque. The tightly packed fat molecules that make up the lipid bilayer encapsulating each cell act as walls that are impenetrable to molecular probes and that scatter light, making imaging a challenge. The makers of CLARITY aimed to circumvent this problem by removing the troublesome lipids while retaining the network’s structural integrity and important biomolecules like proteins. The transparent brain is created by infusing the tissue with hydrogel monomers, hydrophilic molecules that interact with proteins and nucleic acids but not with lipids. When the hydrogel-tissue hybrid is heated, the monomers link together to form a stable three-dimensional network, locking the non-lipid components of the cells in place. Then the loose fats are washed away with an ionic detergent and an electric current, leaving a life-size, biochemically intact, and entirely clear brain.
The standard methods researchers use to examine neurons involve cutting brains into extremely thin slices so that individual cells can be seen through a microscope. Slicing separates cells from their neighbors, making the task of piecing together each and every connection painstaking and error-prone. CLARITY is, therefore, a vast improvement when it comes to understanding connectivity in context. The transparency and mesh-like consistency of the hydrogel network make it the ideal antidote to the opacity of membrane lipids. The pores allow labeled antibodies to penetrate deeper than they could when the membranes were intact, and the tissue’s transparency allows deep structures to be visualized under the microscope. These characteristics make CLARITY well suited to the study of proteins and other function-associated gene products. It is a valuable tool for researchers looking to identify the structural aspects of the active circuits they modulate in live animals. And, boding well for its practicality as a research tool, clarified brains are reusable. They are strong enough to withstand the removal of antibody probes, so multiple proteins can be traced in the same brain. With all its promise, though, CLARITY is not without its limitations. For one, the full process has so far been applied only to mouse brains. Small portions of human brains have been successfully clarified, but more work remains before entire human brains, which are much larger and more complex than mouse brains, can be made transparent. Additionally, as the authors of the journal article point out, CLARITY is not a replacement for electron microscopy. It is invaluable for building a broad understanding of three-dimensional connectivity, but it is a tool intended to be used in conjunction with standard electron microscopy, which provides high-resolution views of the details of synapses. Still, CLARITY holds a great deal of promise for neuroscience and other areas of molecular biology. As lead author Dr. Kwanghun Chung tells the New York Times, tissues other than the brain (the liver, heart, and lung, for example) can be clarified as well. With further refinement, CLARITY could open the way to a more detailed understanding of the molecular intricacies of the human body than ever thought possible. For a video of Dr. Deisseroth explaining CLARITY, see the Stanford School of Medicine’s website.

By Ian MacArthur

Anyone who has enjoyed the temporary stimulation provided by today’s popular energy drinks has experienced the metabolic effects of L-carnitine. For a brief time after consumption, this nonessential amino acid derivative may enhance the body’s breakdown of fat and help to eliminate dangerous free radicals. The compound has also been credited with cardiovascular benefits, allegedly helping to prevent high blood pressure and atherosclerosis. An assessment of the scientific literature on L-carnitine by Vanderbilt University concluded that there is no apparent risk associated with elevated consumption of the compound. However, new research into the metabolism of L-carnitine by microbes in the human intestine has shown that elevated L-carnitine levels can directly contribute to the decline of heart health. The study sheds light on how consumption of red meat, in which L-carnitine is abundant, contributes to heart disease. In a paper published in Nature Medicine this past week, researchers at the Cleveland Clinic found that some bacteria in the human intestine metabolize dietary L-carnitine into trimethylamine. The liver then converts this into trimethylamine-N-oxide (TMAO), a metabolite implicated by a 2011 study as a contributor to heart disease. The study involved measuring L-carnitine levels in the blood plasma of over 2,500 patients, comparing L-carnitine and TMAO levels in omnivores, vegetarians, and vegans, and analyzing the effect of the compound on mice deficient in intestinal microbes. The results indicated that consumption of L-carnitine is associated with higher levels of carnitine-consuming bacteria. That is, an omnivore who eats red meat will increase the population of carnitine-consuming bacteria in his gut, and those bacteria will in turn help raise his TMAO levels. Perpetuation of this positive feedback loop can allow TMAO levels to soar and place the carnitine consumer at greater risk of heart attack and stroke.
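The feedback loop described above can be illustrated with a deliberately simple toy model. This Python sketch is not the Cleveland Clinic team’s analysis. The logistic growth form, the parameter values, the arbitrary units, and the function names (`bacteria_after`, `tmao_from_test_dose`) are all hypothetical, chosen only for illustration. In the model, carnitine-consuming bacteria grow at a rate proportional to both carnitine intake and their own numbers, which is the self-reinforcing part, up to an assumed carrying capacity. The TMAO produced from a single test dose is taken to be proportional to the bacterial population.

```python
# Toy model only: the growth law, parameters, and units are hypothetical,
# not values from the study.


def bacteria_after(days: int, daily_carnitine: float, start: float = 1.0,
                   growth: float = 0.02, capacity: float = 100.0) -> float:
    """Population (arbitrary units) of carnitine-consuming gut bacteria after
    `days` of constant daily carnitine intake.

    Growth is logistic and its rate scales with intake, so a larger population
    grows faster (positive feedback) until the assumed capacity limits it.
    """
    bacteria = start
    for _ in range(days):
        bacteria += growth * daily_carnitine * bacteria * (1 - bacteria / capacity)
    return bacteria


def tmao_from_test_dose(bacteria: float, dose: float, conversion: float = 0.01) -> float:
    """TMAO (arbitrary units) from one carnitine test dose, assumed
    proportional to both the bacterial population and the dose."""
    return conversion * bacteria * dose


# A year of regular carnitine intake versus a year with none
omnivore = bacteria_after(days=365, daily_carnitine=1.0)
vegan = bacteria_after(days=365, daily_carnitine=0.0)
print(f"omnivore TMAO after test dose: {tmao_from_test_dose(omnivore, 1.0):.2f}")
print(f"vegan TMAO after test dose:    {tmao_from_test_dose(vegan, 1.0):.2f}")
```

Under these made-up parameters, a year of daily intake grows the bacterial population by well over an order of magnitude. The same test dose therefore yields far more TMAO than in a long-term abstainer, whose population never changes. This matches only qualitatively the pattern the researchers reported in vegans and vegetarians.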
TMAO levels in vegans and vegetarians did not increase after they ingested L-carnitine. This observation is likely related to the finding that carnitine-consuming bacteria were present in smaller numbers in these participants’ intestines. The leader of the research group, Stanley Hazen, described the crucial link between diet and the composition of intestinal microbe populations. “The bacteria living in our digestive tracts are dictated by our long-term dietary patterns,” Hazen explained. “A diet high in carnitine actually shifts our gut microbe composition to those that like carnitine, making meat eaters even more susceptible to forming TMAO…Vegans and vegetarians have a significantly reduced capacity to synthesize TMAO from carnitine, which may explain the cardiovascular health benefits of these diets.” These recent findings have important implications for the future of dietary supplements. L-carnitine supplements are currently marketed as a harmless addition to one’s diet, but the new research will hopefully lead people to reconsider the benefits of consuming the compound. Research on the effects of dietary supplements on the human body is still in its early stages, so it is important that supplement users exercise discretion when taking untested compounds. As the case of L-carnitine shows, just because a molecule is natural does not mean it is safe.

By Alex Bernstein

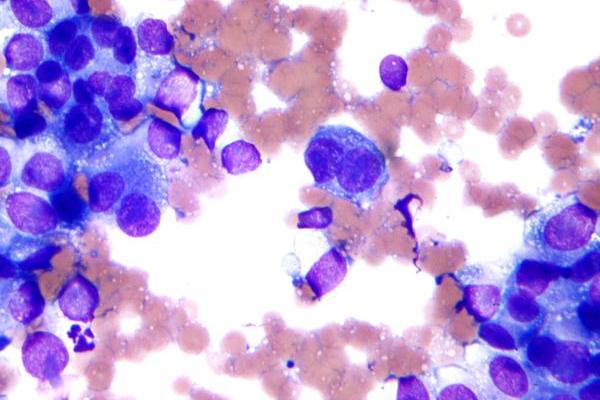

Until quite recently, oncologists, despite all of the progress that has been made in the field, could at best make educated guesses when patients asked about their chances of survival. Cassandra Caton, an 18-year-old, was tragically diagnosed with a large melanoma inside her eye. When she asked, “Am I going to die, is my baby going to have a mommy in 5 years?” doctors could only make a prediction based on the shape and size of the tumor. Now, however, Caton has a much better option. A new genetic test, pioneered by J. William Harbour of Washington University, could provide her with a remarkably accurate prognosis. By identifying which of two chief genetic patterns the patient’s melanoma shows, the test places the cancer into either Class 1 or Class 2. The former represents the milder tumor, which is essentially cured through surgery. The latter indicates a more aggressive malignancy, which claims the lives of about 70 to 80% of patients within five years. Remarkably, the test is even detailed enough to predict where the cancer is likely to spread during metastasis (the spread of cancer), accurately identifying the liver as the organ to watch. So far available only for ocular melanoma, the test represents great progress for the oncology community. Dr. Michael Birrer, a specialist in ovarian cancer at Massachusetts General Hospital, is more than enthusiastic about the procedure. He calls it “unbelievably impressive” and goes as far as to say, “I would die to have something like that in ovarian cancer.” Similar prediction procedures are already in the works for other cancers, such as those of the blood and bones. However, as is often the case with new science, the test raises ethical issues. Many people struggle with finding out whether they have the genetic makeup for incurable disorders such as early-onset Alzheimer’s or Huntington’s disease.
The same can be said for such a cancer test, which will either all but assure patients of their survival or signal a high likelihood of death. Some doctors even choose not to offer the test, arguing that it offers few benefits in terms of treatment. A melanoma researcher at Massachusetts General, Dr. Keith Flaherty, explains that although the test is able to separate people into two prognostic groups, “There is no treatment yet that will alter the natural history of the disease.” Yet despite such concerns, most doctors do believe in the test, and, as Dr. Harbour notes, the overwhelming majority of patients ask for it. When dealing with something like cancer, people want to be as informed as possible. Harbour explains that he gives patients “as much information as [he thinks] they can handle.” Dr. Harbour also argues that a Class 2 identification gives the doctor the opportunity to better manage the melanoma by knowing what to expect and what tests to run. Regardless, the procedure signifies great progress and hints at just how important genetic testing will be to future successes in this field. As for Ms. Caton, despite the large size of her tumor, the cancer turned out to be Class 1. This very positive diagnosis would not have been possible without the test.

By Ian MacArthur

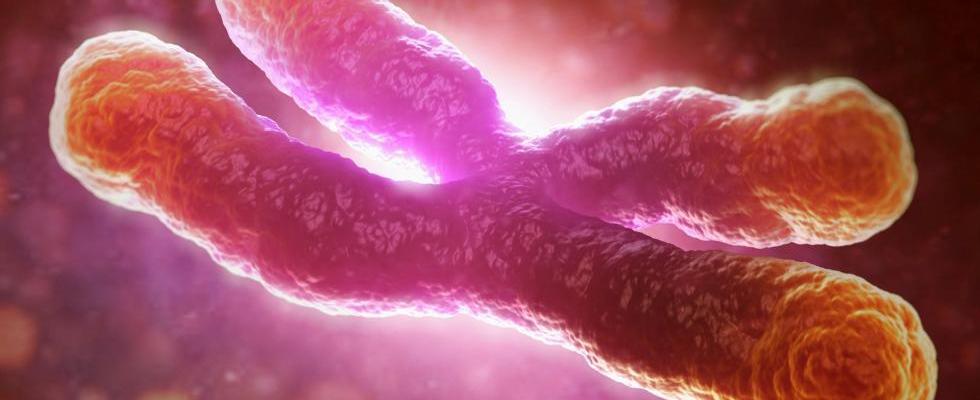

In 1978 Elizabeth Blackburn and Joseph Gall published their work on telomeres, the regions at the ends of chromosomes composed of simple, repetitive DNA sequences. This work was the first venture into elucidating the nature of these structures, which are believed to play an important role in aging and cancer. Now, scientists at the University of Copenhagen have successfully mapped the gene for the enzyme telomerase in the human genome, an accomplishment that has important implications for our understanding of cancer. Each time a cell divides, a small portion of the DNA at its telomeres is lost. When a chromosome’s telomeres become too short to protect the vital genetic material they cap, the cell stops dividing. The lifespan of a cell, then, is limited by the length of its telomeres. Further research revealed that telomeres can be regenerated with the help of the enzyme telomerase, prompting sensationalists to exalt the enzyme as a “fountain of youth.” The relationship between telomerase, telomeres, and cellular senescence (biological aging) is not fully understood, but it is widely acknowledged that telomerase plays an important role in the cellular physiology of cancer. Of the myriad types of human cells, only sex cells, stem cells, and some white blood cells are known to express telomerase. Cancer cells, however, are able to produce the enzyme and can therefore keep dividing indefinitely. Now that the gene that codes for the enzyme has been mapped, scientists will be able to better understand how variations in the telomerase gene may predispose someone to a certain type of cancer.
One of the researchers involved with the project, Stig E. Bojesen, commented, “We have discovered that differences in the telomeric gene are associated both with the risk of various cancers and with the length of telomeres.” Another interesting finding, according to Bojesen, was that “the variants that caused the diseases were not the same as the ones which changed the length of the telomeres. This suggests that telomerase plays a far more complex role than previously assumed.” It is hoped that mapping the gene will not only lead to breakthroughs in cancer treatment but also improve clinicians’ ability to identify cancers early based on variations in a patient’s gene. The paper published in Nature Genetics announcing the mapping of the telomerase gene represents a truly global effort in cancer research. The project took over four years to complete, involved over 1,000 scientists from around the world, and used blood samples from over 200,000 patients. To date, it is the largest research project conducted in the field of oncological genetics. The project has revealed a great deal of meaningful information, but Bojesen notes that “as is the case with all good research, our work provides many answers but leaves even more questions.”

By Nate Posey

I like to imagine myself as being something of a science fiction connoisseur. As such, I was quite shocked and more than a little embarrassed to discover that until a few months ago I had scarcely even heard of what has been dubbed the single greatest science fiction book ever written: the Hugo-winning, Nebula-winning, all-time best-selling Dune by Frank Herbert. The novel details the ascent of young Paul Atreides, heir to the ducal fief of the desert planet Arrakis, who marshals the native populace against his father’s usurpers to reclaim his rightful inheritance. Herbert is a masterful storyteller, and the universe of Dune is simply unparalleled in its richness and authenticity. Nevertheless, several of the story elements seemed somewhat out of place in this alleged masterpiece of science fiction. Paul’s mother, the Lady Jessica, is a Bene Gesserit witch, a member of the galaxy-spanning order of mystics whose prophecies and powers of mental suggestion play a key role in Paul’s development from a young princeling into the legendary Muad’Dib, the messiah of Arrakis. Although the mystical elements in Dune never truly cross into the overtly magical realm of sword-and-sorcery fantasy, many of the characters’ telepathic interactions are nevertheless suggestive of supernatural forces. Most peculiar of all, however, is the political order in the universe of Dune; the galaxy is held in a tenuous balance of power between the Padishah Emperor and the various Lords of the Great Houses who have parceled up the various star systems into feudal estates. Such medieval politics seems glaringly anachronistic in a futurescape replete with shield generators, laser rifles, and superluminal spacecraft. To be sure, Herbert was neither the first nor the last to invoke such a topsy-turvy alternate universe. 
Isaac Asimov’s Foundation series details the emergence of a second galactic empire from among the thousands of fractious “barbarian” kingdoms that sprang up after the collapse of the first, and George Lucas’ Jedi Knights would be more at home in Camelot than in a galactic republic. Such works often straddle the line between science fiction and fantasy, blending arcane tropes into an otherwise technologically advanced society. Many works of so-called “hard” science fiction explore the inevitable complications arising from the development of new technologies. These fantasy-esque works, by contrast, allow for the retelling of simpler and more familiar arcs: the conflict between order and chaos, between good and evil, and so on. Such works represent escapism at its finest, where the classical struggles of heroes can play out on the most spectacular of stages. The enduring appeal of such works may very well be due more to their traditional elements of fantasy than to their science fiction motifs. Today’s readers vastly prefer fantasy to science fiction, with the former outselling the latter by as much as 10 to 1 in the domestic market. Author Graham Storrs suggests that this shift in popular taste may be the result of a general disillusionment with the once lofty promises of “scientism” (excessive belief in the power of scientific knowledge and techniques). He notes that many of the developments that dazzled spectators with untold possibilities in the twentieth century (spaceflight, nuclear power, etc.) have fallen flat in the twenty-first. He writes: “These days scientism is a minority religion, science is seen as a golden calf to be cast down, and the scientists we once placed on pedestals are fallen idols. We’re disappointed and disillusioned. We didn’t get answers. We didn’t get squat except Windows 7, genetically modified corn, and electronic trading.” Personally, I am still optimistic about the future of science fiction.
Although most people have become rather jaded by the apparent trajectory of so-called progress (“The new iPhone 5 is 20% lighter than the last one, you say?”), history shows us that such trajectories are almost always misleading; no one ever really predicts the next big earth-shattering advance until it’s right on top of us. I am confident that the next few decades will offer us a vision of a future that is once more exciting enough to speculate about without writing in warriors, wizards, or (God forbid) vampires. Who knows? The future of 2050 might well resemble the future of 1960 more than the future of 2010.
