
By Yameng Zhang

Edited By Thomas Luh

“To Study the Brain, a Doctor Puts Himself Under the Knife.” Staring at the title, I was shocked, and then touched, but at last I shrugged and murmured to myself: “Is this a mad scientist in real life?” This title is from a news article about Phil Kennedy, a neuroscientist. Kennedy was enthralled with neuroscience from a very young age, so he stayed in labs for years developing “invasive human brain computer interfaces,” with which neurons can grow on an artificial wire connecting the human brain to a computer. (Sounds like the idea of a mad scientist.) Early research was productive: a severely paralyzed, locked-in patient could move a computer cursor with this interface. However, after experiments in which five patients died or experienced severe suffering during research, the U.S. Food and Drug Administration (FDA) refused to approve further implantation of electrodes in human brains in Kennedy’s experiments. After analyzing the old data for a long time, Kennedy came to an impasse: he did not have sufficient data, funding was scarce, and the ethics of the experiment were not justified. Then, he made the decision to experiment on himself. “This whole research effort of 29 years so far was going to die if I didn’t do something,” he said. “I didn’t want it to die on the vine. That is why I took the risk.” However, the side effects of this experiment could be huge. Once his skull was opened, Kennedy was at risk of dying at any time. During the twelve hours of surgery, his blood pressure soared, even causing temporary paralysis. However, Kennedy said: “I wasn’t the least bit scared. I knew what was going on. I invented the surgery.” (He sounds confident, but again, also like a mad scientist.) The result was that Kennedy collected some truly precious data, and research on cyborgs (beings with both organic and biomechatronic parts) continued. Dating back to 605 BCE, self-experimentation has a long history.
One early example of self-experimentation is a king’s diet change, found in the Bible. Daniel, a Jewish prisoner, and his colleagues were granted positions in the government of Nebuchadnezzar. However, they could not accept the king’s diet of wine and meat. When they suggested that the king modify his diet to a more vegetable-based one, local people doubted whether the new diet could support daily activities. Daniel and his colleagues tried the new diet themselves for two weeks, and proved to others that it was indeed healthier than the established one. As in this case, many self-experiments are “mild experiments,” which do not expose the subject to high risks. Later self-experimentation in medicine and biology did indeed involve more danger. Carried out with the determination of a professional scientist, self-experimentation could include long-term or high-risk experiments, offer very detailed data, and often yield amazing results. For example, Sanctorius of Padua weighed himself, his daily solid and liquid intake, and his combined excretions for over thirty years, leading him to the discovery of metabolism. So, should self-experimentation be extolled? Should selfless sacrifice be promoted? For me, no. One concern is the accuracy of the experimental results: self-experimentation, as a single-subject scientific experiment, is not completely objective. The subject is already well informed about all of the theories behind the experiment and has his or her own expectations for the results. Also, it is not easy for any human to prove his or her own postulate wrong. From another angle, we might consider the well-being of the scientists themselves. Scientists already are a group of people with outstanding persistence and a strong desire to solve problems. We should not expect more from them. In other words, even if we knew that self-experimentation was more effective than regular experimentation, we should not put more pressure on them to conduct it.
If we extol and promote self-experimentation, more scientists will do it because of peer pressure and concern for their reputation. They have already devoted their energy to science, and they do not have the responsibility to devote their lives.


By Tiago Palmisano

Edited By Bryce Harlan

American football is currently undergoing a metamorphosis. The rules, from what constitutes a foul to the distance of an extra-point field goal, seem to fluctuate more than in any other major American sport. And over the brief span of a few years, our conception of the football player seems to have changed as well. A football player is a warrior, the modern-day Hercules, the quintessential conqueror that overcomes any challenge with a display of strength and endurance. But unfortunately, he is not as unbreakable as we used to believe. Chronic traumatic encephalopathy (CTE), for example, is now infamous as a major risk of playing American football. Sadly, new studies demonstrate that the heart may also be a victim of this extremely popular game. Dr. Jeffrey Lin (a cardiac imaging fellow at Columbia University Medical Center) recently presented a portion of his research data at the annual meeting of the American Heart Association. The data, obtained from 87 freshmen, revealed that the systolic blood pressure of collegiate football players increases significantly as the season progresses. Additionally, the heart muscle was significantly thicker at the end of the season than at the start. Thickening of the heart muscle is associated with a decrease in heart function (it’s counter-intuitive, I know) and so this last finding is not a positive one. These conclusions suggest that American football players are at increased risk for hypertension (high blood pressure), despite having above-average athleticism. Although preliminary data such as Lin’s should be taken with a grain of salt, a 2013 study by researchers at Stanford’s medical school arrived at similar results. An interesting finding of both investigations is that among the different positions, linemen exhibited the most severe increase in blood pressure.
Unlike in many other sports, the physique of an American football player varies widely with his specific role. Defensive backs, running backs, and wide receivers are typically lean with low body fat percentages. Quarterbacks usually have a balanced build, and tight ends are slightly more beefy than other receivers. Linemen, on the other hand, have a different physique entirely. These players are the offensive and defensive personnel closest to the line of attack, and they are typically the largest and heaviest on the team. Just look at the average NFL running back next to his team’s center and this difference is obvious. This is not to say that linemen are unfit. Ali Marpet sprinted 40 yards in less than 5 seconds at the 2015 NFL combine, and he weighed in at 307 pounds. Yet, despite their superhuman abilities, linemen are at a greater risk for cardiovascular disease than other players. The 2013 Stanford study illustrates that linemen develop the largest increase in heart wall thickness over the season compared to players in other positions, even when accounting for the differences in body size. Lin’s data corroborate this finding. Of the 87 players in Lin’s study, none had hypertension at the beginning of the season. By the end, however, thirty percent of the linemen had developed high blood pressure, compared to just seven percent of the other players. Lin speculates on the reason for this stark variance, suggesting that the task of linemen during games and practices is not conducive to a healthy heart. Linemen are rarely required to run for more than a few seconds on any given play, instead having to exert tremendous amounts of energy in short bursts as they block or tackle at the line of scrimmage. A focus is therefore placed on anaerobic exercise, which can lead to hypertension if not accompanied by cardio-based aerobic exercise. It is indeed difficult to change the perception of a game that is so embedded in American culture.
But we must continue to pursue scientific investigations if we want to protect the players from the dangers of football. The brains and hearts of players are of the utmost importance. Efforts are now being made to combat the risks of CTE, and Will Smith’s movie Concussion will hopefully raise awareness of it. We should be careful to consider that the heart may need a defense of its own.

By Kimberly Shen

Edited by Arianna Winchester

On August 12, 1904, three hundred rounds of cannon fire announced the birth of the first son born to the reigning royal family in St. Petersburg, Russia. This birth was particularly special for both the Russian royal family and the Russian people since they finally had a new heir to the throne. Russian law dictated that only a male could inherit the throne, so Alexei Nikolaevich became the only child who could rule over Russia after his father, Nicholas II, died. However, the joy that Nicholas II and his wife experienced soon turned into horror when they found out that their only son was born with Hemophilia B. Those born with hemophilia lack clotting factors in their blood, so even a small injury can lead to severe bleeding or death. Hemophilia B is a recessive sex-linked disorder that is more frequent in males because the gene for this disorder is only found on the X chromosome. Males only have one X chromosome, so a boy needs to inherit only one mutated X chromosome, carrying the gene for hemophilia, from his mother to be affected. Hemophilia B is much less common in females because a girl would have to inherit two mutated X chromosomes to be affected. The disease is recessive, so if a girl is born with one mutated X chromosome and one normal X, her healthy X compensates and she will typically not show symptoms of hemophilia. Women who have one healthy X and one mutated X are referred to as “carriers” because they can pass the disease on to their offspring. Alexei’s condition was a constant source of anguish for his parents, especially his mother, Alexandra, who felt extremely guilty for passing on the condition to her only son. Alexandra’s grandmother, Queen Victoria of England, was also a carrier who passed the trait on to a few of her children. Several other members of Alexandra’s family died due to complications from this disease.
Knowing that even the smallest injury could potentially lead to death for Alexei, both Nicholas and Alexandra became painfully aware that Russia’s future was uncertain. Nicholas and Alexandra chose to keep his condition a secret from most of their subjects. When he was three years old, Alexei suffered an injury that left him on the brink of death. In desperation, his mother asked a close confidante to secure the help of a mystic named Grigori Rasputin. What followed seemed to be nothing short of a miracle. At a time when even the best doctors in Russia were unable to treat Alexei’s condition, Rasputin was able to make his bleeding stop. Even now, the nature of Rasputin’s healing methods remains a mystery. Some historians believe that Rasputin hypnotized Alexei. Others believe that Rasputin stopped the administration of anti-clotting drugs that may have been aggravating Alexei’s condition. Regardless, Alexandra saw Rasputin as a holy savior for her son. As a result, Rasputin was given legal privileges and easy access to the royal family. He even advised the Emperor and Empress on political affairs and filled government posts with his own supporters. This often led to disastrous political results, but the Empress remained blind to Rasputin’s faults since she saw him as the only person who could save her son. Ultimately, Rasputin’s influence over the royal family helped lead to their downfall. Because very few people knew of Alexei’s condition, most people could not understand why a commoner like Rasputin had such influence over the royal family. Rasputin’s power in court angered nobles and commoners alike. Rasputin was ultimately assassinated by a group of Russian nobles, two of whom were relatives of Nicholas II. Less than a month before his death in 1916, Rasputin wrote a last letter to Nicholas II.
In this letter, Rasputin seemed to foresee his own death, writing: “If I am murdered by boyars, nobles, and if they shed my blood, their hands will remain soiled with my blood… Tsar of the land of Russia, if you hear the sound of the bell which will tell you that Grigori has been killed, you must know this: if it was your relations who have wrought my death then no one of your family, that is to say, none of your children or relations will remain alive for more than two years. They will be killed by the Russian people.” Eerily enough, Rasputin’s predictions came true. A little over a year later, Nicholas and his family were imprisoned in Yekaterinburg and executed by Russian revolutionaries. For many years, it was rumored that thirteen-year-old Alexei may have survived the execution. Still, many people considered his survival highly unlikely due to his medical problems. The truth was finally revealed in 2007, when human remains were discovered in a forest in Yekaterinburg. After extensive DNA testing, it was ultimately confirmed that they were the remains of Alexei, the last heir to the throne of Russia.

Written by Dimitri Leggas
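The inheritance pattern described above can be sketched as a small Punnett-square enumeration. This is only an illustration: the allele labels (`XH`, `Xh`, `Y`) and the function names are made up for this sketch, and it assumes each parent passes on either sex chromosome with equal probability.

```python
from collections import Counter
from itertools import product

# Illustrative labels (not from the article):
# "XH" = X chromosome with a working clotting-factor gene,
# "Xh" = X chromosome carrying the hemophilia mutation, "Y" = Y chromosome.

def classify(child):
    """Label a child by sex and hemophilia status from its two sex chromosomes."""
    if "Y" in child:
        # A son has a single X, so one mutated copy is enough to be affected.
        return "affected son" if "Xh" in child else "unaffected son"
    mutated = child.count("Xh")
    if mutated == 2:
        return "affected daughter"
    return "carrier daughter" if mutated == 1 else "unaffected daughter"

def offspring_odds(mother, father):
    """Enumerate the four equally likely pairings of one chromosome from each parent."""
    outcomes = Counter(classify(pair) for pair in product(mother, father))
    total = sum(outcomes.values())
    return {label: count / total for label, count in outcomes.items()}

# A carrier mother and an unaffected father, the situation described for Alexei's parents.
odds = offspring_odds(("XH", "Xh"), ("XH", "Y"))
for label, probability in sorted(odds.items()):
    print(f"{label}: {probability:.0%}")
```

Under these assumptions each of the four outcomes (unaffected daughter, carrier daughter, unaffected son, affected son) is equally likely, which is why a carrier mother can have healthy children as well as an affected son.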

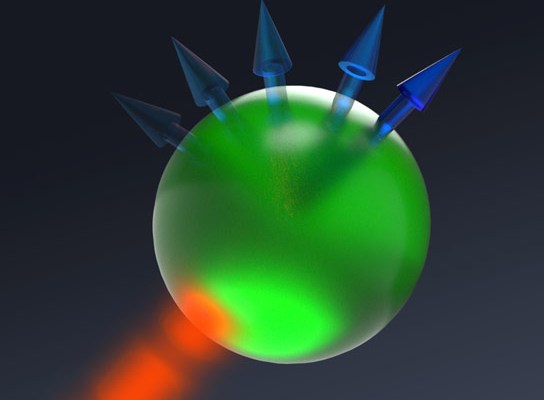

Edited by Hsin-Pei Toh

The allure of quantum computing has not worn off since mathematicians and physicists like Yuri Manin and Richard Feynman theorized the field in the 1980s. Quantum computing promises rapid speed-ups in computations that can be parallelized, with potential impact on data security, as well as the financial and health industries. Currently, the development of functional quantum computers is at an early stage. However, recent advances made by a team at the University of New South Wales (UNSW) have shown that quantum logic gates can be constructed in silicon, an industry standard material for computer chips and transistors. The theoretical increase in speed derived from operating a quantum computer stems from the ability to store exponentially more information in a quantum bit, or qubit, than in a standard bit. Modern digital computers are based on series of transistors, which control the flow of electrons to define two possible states for a bit: 0 or 1. Logic gates, systems that take two inputs and return a single output, form the basis of digital circuits. In the quantum arena, qubits are transistors that are reconfigured to hold a single electron, and the spin of this electron determines the state. Unlike a bit, a qubit is not in one of two definite states, but rather may be in a superposition of states. Suppose one has three qubits. The state of those qubits is a probability distribution over the $2^3 = 8$ possible states: 000, 100, 010, 001, 110, 101, 011, 111, whereas in a classical computer the state of the bits is precisely one of those options with probability 1. Despite the dramatic increase in information storage capacity, there are inherent drawbacks to storing information in this way. Since the states are non-deterministic, there is no guarantee that an algorithm returns the same answer to a problem each time it is solved. So a process must be repeated many times in order to determine if the answer found is correct.
Additionally, the superposition of states is only beneficial if data can be processed in parallel, meaning that each step does not rely on the previous one. If a certain process is mostly sequential, quantum computations are not faster than classical ones. Quantum processes rely on the entanglement of qubits, which until recently had only been produced in expensive materials like diamonds and cesium. Menno Veldhorst and Andrew Dzurak, researchers at UNSW, have improved existing industry technologies to entangle two qubits in silicon transistors by using microwave fields and voltage signals to force the two qubits to interact with one another, so that the state of the second qubit depends on that of the first. In the logic gate built by the UNSW team, there is a target qubit and a control qubit. The target qubit maintains its original spin if the control qubit points upward (value of 1), but changes its spin if the control qubit is pointing downward (value of 0). Veldhorst says that extrapolating from this two-qubit gate will allow any size gate set to be constructed, making an incredible array of quantum computations possible. In addition to the development of the two-qubit gate, the UNSW scientists have developed a method by which the number of qubits can be scaled to millions, leading to the development of the first quantum computer chip. Despite these significant developments, one serious drawback remains. The quantum logic gate built at UNSW cannot function at room temperature, since it must be cooled to 1 Kelvin (or -458 degrees Fahrenheit). Nonetheless, Veldhorst and Dzurak remain optimistic because of recent progress made in cooling and refrigeration. The possibility, or perhaps inevitability, of quantum computers is promising. However, quantum computing will change how humans approach problems in fields like cryptography.
For example, the Rivest-Shamir-Adleman (RSA) cryptosystem, a cryptosystem for public-key encryption, depends heavily on the long time required to factor large numbers into primes. In RSA, a public key built from the product of two large primes is used to encrypt data, and a private key is used to decrypt the data. If one does not know the private key, one would have to factor that large product to decrypt the data, which currently is a practical impossibility. But with the powerful parallel potential to be found in quantum processors and Shor’s algorithm, a quantum algorithm for factoring numbers using parallel processes, potential hackers could easily render RSA useless. This small example in cryptography reflects the incredible impact quantum computing could have on our daily lives. While quantum computers may not be necessary for the everyday consumer needs of most individuals, they will nonetheless change underlying processes that concern our every decision, in particular when using the internet.

Written by Jack Zhong
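The counting argument above can be checked with a short sketch. It enumerates the basis states of an n-qubit register and, as a simplifying assumption not taken from the article, assigns them a uniform probability distribution to mimic an equal superposition. The function name `basis_states` is illustrative.

```python
from itertools import product

def basis_states(n_qubits):
    """List every n-bit string, i.e. every definite state the register could be measured in."""
    return ["".join(bits) for bits in product("01", repeat=n_qubits)]

states = basis_states(3)
print(len(states), states)

# Assumed for illustration: an equal superposition, so each outcome is equally likely.
probabilities = {state: 1 / len(states) for state in states}

# A classical 3-bit register, by contrast, sits in exactly one state with probability 1.
classical = {state: (1.0 if state == "101" else 0.0) for state in states}

# The number of basis states doubles with every added qubit.
for n in (1, 2, 3, 10):
    print(n, "qubits ->", len(basis_states(n)), "basis states")
```

The doubling in the last loop is the sense in which a register of qubits holds exponentially more information than the same number of classical bits.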
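The two-qubit gate described above can be sketched as a truth table on definite spin values. This follows the convention exactly as the article states it (the target flips when the control is 0); textbook presentations of the controlled-NOT gate usually flip the target when the control is 1 instead. The function name `controlled_flip` is hypothetical, and the sketch ignores superposition entirely.

```python
def controlled_flip(control, target):
    """Return the target's new value: unchanged if control is 1, flipped if control is 0."""
    if control not in (0, 1) or target not in (0, 1):
        raise ValueError("control and target must each be 0 or 1")
    return target if control == 1 else 1 - target

# Print the full truth table for the four definite input combinations.
for control in (0, 1):
    for target in (0, 1):
        result = controlled_flip(control, target)
        print(f"control={control} target={target} -> new target={result}")
```

Because the output depends on both inputs, the state of the target after the gate carries information about the control, which is the dependence between the two qubits that the article describes.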

Edited by Josephine McGowan

Cars are an essential part of modern American life. Yet, unfortunately, they are also one of the primary sources of the pollution that contributes to climate change. As a result, manufacturers have begun to introduce more fuel-efficient cars and electric cars. Electric cars ingeniously utilize battery power or other forms of electrical energy as fuel, rather than the quickly depleting and environmentally harmful fossil fuels. However, the electric car has not caught on as a consumer norm as quickly as expected. Customers who consider buying electric cars are still wary of their potential limitations. For instance, many consumers worry about the limited driving range of electric cars and the charging time. Indeed, public charging stations are not readily available in many areas across the country, and the charging jacks provided do not always match. If one were to “run out of juice” in an electric car in a remote area, it would be very difficult to resolve this situation. As a result, customers tend to purchase what they view as the “safer” alternatives of hybrids or diesel vehicles. For instance, the Toyota Prius (Figure 1) is one popular hybrid model, but many other models are now available. These alternatives are attractive to buyers concerned about the environment because diesel and hybrid vehicles have better gas mileage than conventional gasoline-burning cars. In addition, unlike electric cars, both diesel and hybrid vehicles can be easily refilled at the gas station. Further, consumers believe gasoline cars have better performance in handling and acceleration. However, despite these views, it is undeniable that electric cars offer many environmental and performance advantages that should far outweigh the inconveniences. Though the evidence of the environmental advantages of electric cars is strong, many consumers believe the impact of electric cars is not as significant as it is made out to be.
The argument goes: “even if the car itself does not produce emissions, the electricity to power the car comes from coal and gasoline burning plants; we are still destroying the environment with greenhouse emissions.” Yet, this argument fails to acknowledge that although the electric energy does come from a power plant, a power plant is much more efficient than a car’s engine in burning and extracting the gasoline’s energy. The gasoline car engine loses a lot of energy in the form of heat in the process of burning gas to drive the pistons. The power plants that generate electricity for electric cars also burn gas. Yet, the large turbines of the electric power plants waste less heat, and secondary heat sinks at the power plant collect “extra” heat that is reused for power generation. Furthermore, compared to gasoline cars, electric cars are much more efficient because electric motors are better than gasoline engines at converting stored energy into motion. The electric motor “converts more than 90 percent of the energy in its storage cells to motive force, whereas internal-combustion drives utilize less than 25 percent of the energy in a liter of gasoline” (Source A). Another energy-saving feature of electric cars is that electric motors do not need to stay running while the car is idle. Braking can also regenerate electric energy to power the car. Overall, the owner of an electric car will spend less money to put electricity in the car, and will use much less fossil fuel to power the vehicle. In addition to environmental advantages, electric cars have convenience advantages. Plugging in the car at night would mean no more gas station visits. In some electric vehicles made by Tesla Motors, the owner can remotely turn on the air conditioner before entering the car, which is quite a perk during the summer. This would not be possible with gasoline cars, as running the A/C will drain the battery needed to start the engine.
If the driver does have to go the distance, Tesla Motors has established “supercharging stations,” where cars can be charged quickly (Figure 2). The Tesla superchargers are spread strategically to cover a large area, and they charge half the battery in about 30 minutes. A fully charged Tesla Model S P90D sedan can travel about 273 miles (Figure 3). Eventually, Tesla plans to use solar panels to power the superchargers and produce extra power to put back into the grid. Although superchargers only work with Tesla vehicles as of now, the company is open to including cars of other manufacturers in the future. In terms of driving performance, electric cars actually have an advantage in acceleration over gasoline cars. The force of wheel rotation that the engine generates is known as torque. Electric cars now have improved performance because electric motors have more torque than gasoline motors when the cars begin to accelerate. This means that the wheels would move faster more easily, while using less energy. In fact, the Model S P90D has a 0-60mph time of 2.8 seconds. For comparison, a Ferrari 458, an ultra-expensive gasoline-powered sports car, has a 0-60mph time of 3.0 seconds. Electric cars also handle turning and maneuvering quite well because the battery pack can be designed to be low to the ground, which reduces the center of gravity and improves the car’s weight distribution. Despite their advantages, however, electric cars are not perfect. For one, because they utilize novel technologies, they’re quite expensive. Yet, with mass production and technology improvements, electric cars should be much cheaper within five years. As we face crises of global warming and the decreasing supply of gasoline, we must find better ways to use our resources. Given that electric cars are more environmentally friendly and offer great performance, companies should invest in ensuring that they are further improved. 
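The two 0-60 mph times quoted above can be turned into average accelerations with a few lines of arithmetic. This is a rough sketch: it assumes constant acceleration over the run, which real cars do not deliver, and the function name `average_acceleration` is illustrative.

```python
MPH_TO_MS = 0.44704       # one mile per hour in metres per second
STANDARD_GRAVITY = 9.80665  # m/s^2, used only to express the result in g

def average_acceleration(zero_to_sixty_seconds):
    """Average acceleration in m/s^2 for a 0-60 mph run of the given duration."""
    return 60 * MPH_TO_MS / zero_to_sixty_seconds

# Times taken from the article's comparison.
times = {"Tesla Model S P90D": 2.8, "Ferrari 458": 3.0}
for car, seconds in times.items():
    accel = average_acceleration(seconds)
    print(f"{car}: {accel:.2f} m/s^2 (about {accel / STANDARD_GRAVITY:.2f} g)")
```

The 0.2 second gap looks small, but it corresponds to a noticeably higher average acceleration over the same change in speed.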
Consumers should support the adoption of electric cars by spreading awareness of their benefits and purchasing electric cars. Overall, electric cars hold great promise as the transportation of tomorrow.

By Julia Zeh

Edited by Ashley Koo

Exactly one hundred years ago, in November of 1915, Albert Einstein finalized the field equations of his general theory of relativity. Despite the passage of a century, his special and general theories of relativity still stand and are still incredibly relevant in modern research, making November 2015 a month to celebrate Einstein’s work. Einstein’s theories of special and general relativity have been fundamental in advancements in physics and cosmology over the past century. These theories were revolutionary in the way they broke from classical Newtonian physics, changing the way scientists think about and define time, space, and mass. Part of what makes Einstein’s theories so incredible is how they came to be. Einstein used thought experiments to look at scientific problems; by imagining certain situations he was able to uncover vital physical ideas about the universe. One of Einstein’s most famous thought experiments involved the constancy of the speed of light. At age 16, an age when most people are spending their time thinking about the perils of high school rather than the implications of Maxwell’s equations, Einstein was imagining traveling at 300 million meters per second (the speed of light) alongside a beam of light. (Maxwell’s equations describe the electromagnetic behaviors that lead to the production of light.) According to classical Newtonian mechanics, light would appear to be standing still. Experimental results and Maxwell’s equations, however, suggest otherwise. The teenage Albert Einstein then concluded that the speed of light must be constant, and not relative to anything. This thought experiment led him to further thought experiments and to the special theory of relativity. Light is particularly interesting because its velocity is not actually relative to anything else. Einstein’s theories of relativity build on the idea that all other velocities are relative, an idea that dates back to Galileo.
For example, if someone were to run while inside a bus in motion, they would be running relative to the frame of reference of everyone else on the bus. The velocity of the runner is observed differently by people also on the moving bus and people at rest on the street outside the bus. Special relativity is derived from the idea that all velocities are relative except the speed of light, which is always constant. The theory of special relativity describes a special case of motion where velocity is constant and there is no acceleration. Simply put, special relativity proposes that space and time are not absolute, as was previously thought; they vary from observer to observer. In certain situations, observers see objects in motion getting smaller in their direction of motion and time running slower on clocks in motion. These effects stem from the constancy of the speed of light. Therefore, everything is relative -- there is no “correct” observation of things in the universe. Time dilation and length contraction are real effects, but because human observations concern a very small range of speeds, lengths, and times within the scale of objects in the universe, we don’t see the effects of time dilation and length contraction in our daily lives. Space and time are not absolute and different observers see different lengths and times depending on their motion. Thus velocity, mass, and distance all impact time and space, as the theories of special and general relativity imply, and so scientists describe a single fabric of the universe, namely spacetime. Briefly put, time is fundamental in describing events and thus is considered a fourth dimension, which is described alongside space. Einstein also came up with general relativity, the theory which we celebrate this month. General relativity is a more general form of his special theory (special relativity), and it applies to acceleration and to gravity. 
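The time dilation and length contraction effects described above are both governed by the Lorentz factor, 1 / sqrt(1 - v²/c²), from special relativity. The sketch below evaluates it at a few speeds to show why the effects go unnoticed in daily life. The example speeds are round illustrative values chosen for this sketch, not figures from the article.

```python
import math

SPEED_OF_LIGHT = 299_792_458  # metres per second

def lorentz_factor(beta):
    """Lorentz factor for a speed given as a fraction of the speed of light (beta = v/c)."""
    if not 0 <= beta < 1:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    return 1 / math.sqrt(1 - beta ** 2)

# Illustrative speeds: two everyday ones and two relativistic ones.
examples = {
    "car at 30 m/s": 30 / SPEED_OF_LIGHT,
    "airliner at 250 m/s": 250 / SPEED_OF_LIGHT,
    "60% of light speed": 0.6,
    "99% of light speed": 0.99,
}

for label, beta in examples.items():
    gamma = lorentz_factor(beta)
    # A moving clock runs slow by a factor of gamma; a moving 1 m rod
    # is measured as 1/gamma metres along its direction of motion.
    print(f"{label}: gamma = {gamma:.15f}, 1 m rod measures {1 / gamma:.6f} m")
```

At everyday speeds the factor differs from 1 only far beyond the decimal places any ordinary clock or ruler can resolve, while at a large fraction of light speed the stretching of time and shrinking of length become substantial.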
Gravity is a unique force: it is vastly weaker than the other physical forces (electromagnetic, strong nuclear, and weak nuclear) and acts at huge distances. Einstein was able to discover that gravity is also unique because it warps spacetime, and this is described in his general theory of relativity. The easiest way to think about gravity’s effects and the warping of spacetime is by imagining a large sheet with a bowling ball at the middle. If the sheet is held up off the ground, the bowling ball pulls it down in the middle, changing its shape. If other objects are then placed on the sheet, they are attracted to the bowling ball at the middle because of the shape of the sheet. Any other round objects placed on the sheet would roll towards the middle because of this warping effect. This is analogous to the effect that matter has on the fabric of space and time. General relativity has a multitude of implications for the Big Bang, for black holes and for other aspects of the universe. Although the theory is now one hundred years old, it is still remembered and honored. What is particularly remarkable is that Einstein generated ideas that nobody else could at the time. These ideas also may not have seemed entirely rational, given how radically different they were from the established laws of classical physics. However, Einstein’s ability to think creatively and innovatively and to challenge preexisting notions of space, time and classical mechanics is an ability that is essential to scientific discovery.

By Kimberly Shen

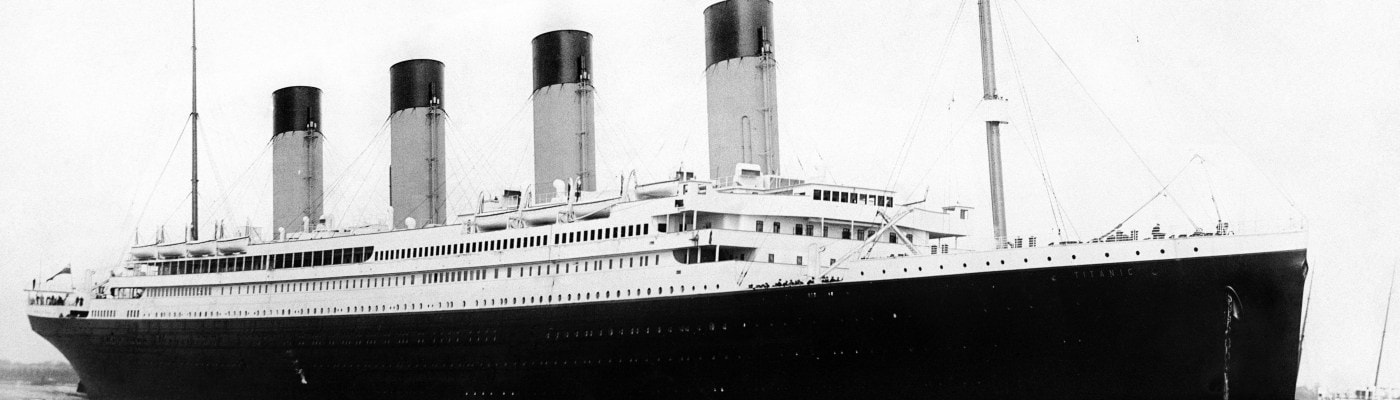

Edited by: Arianna Winchester

Though we may think we know everything about the destruction of the Titanic over a century ago, new scientific developments are enabling us to find out new information about what actually happened on that tragic night in 1912. Modern methods of mitochondrial DNA testing are helping to tell us who was on that ship and whether they survived its sinking. Two-year-old Loraine Allison sailed on the Titanic as a first-class passenger along with her parents, some of their servants, and her baby brother, Trevor. Shortly after the ship struck the iceberg, the family maid and Trevor were able to make it to one of the lifeboats. However, Loraine and her parents were lost in the disaster. Loraine’s father’s body was eventually discovered, but the bodies of Loraine and her mother were never found. Loraine was presumed dead for nearly 30 years. In 1940, a woman named Helen Kramer appeared on a radio show and claimed that she was the long-lost Loraine Allison. She said that just before the Titanic sank, her father saved her by placing her in a lifeboat with the Titanic’s naval architect, Thomas Andrews. After learning that Loraine’s parents died in the wreckage, Andrews took on the role of an adoptive father. Kramer desperately tried to convince the public of her supposed identity and subsequently tried to claim the Allison family fortune. However, the members of the Allison family dismissed her claims as fraudulent. Kramer ultimately passed away in 1992, unrecognized as baby Loraine. Nevertheless, in April 2012, Debrina Woods, Kramer’s granddaughter, rekindled her grandmother’s campaign by launching a website again claiming that her grandmother was indeed the long-lost Loraine Allison. In response, a group of forensic scientists began the Loraine Allison Identification Project to compare mitochondrial DNA from female descendants of Kramer to that of descendants of the Allison family.
Since mitochondrial DNA is inherited through the mother and generally stays the same from one generation to the next, mitochondrial DNA is a great tool for tracing ancestry through females. Mitochondrial DNA was extracted from Debrina’s half-sister, Deanne Jennings, and compared to that of Sally Kirkelie, who was the grand-niece of Loraine’s mother. In December 2013, the forensic scientists of the Loraine Allison Identification Project said that their mitochondrial DNA testing proved that Helen Kramer was not Loraine Allison. Thus, although Loraine’s body was never recovered, these results seemed to conclusively prove that she did not survive the sinking of the Titanic. Even though the results of the mitochondrial DNA testing disproved Helen Kramer’s claims, Debrina Woods continues her campaign to this day. In her most recent post in February 2015, Debrina said that she remains committed to completing a book and screenplay about her grandmother’s story in order to bring the “real truths” to light. David Allison, the grandson of Loraine’s uncle, responded to the results of the mitochondrial DNA testing and Debrina’s continued campaign, saying, “The Allisons never accepted Mrs. Kramer’s claim, but the stress it caused was real. It forced my ancestors to relive painful memories.” His sister, Nancy Bergman, agreed. Bergman thought that Kramer’s old argument and Woods’ willingness to keep it alive are “all about money…Debrina wants to write a book and no doubt there are others out there who want to profit from our story. It is our story. Leave us in peace,” she begs.

By Yameng Zhang

Edited By Thomas Luh

On October 26th, 2015, the International Agency for Research on Cancer (IARC), the cancer agency of the World Health Organization, released a monograph (a report discussing a subject in detail) evaluating the carcinogenicity of red meat and processed meat consumption (that is, whether they are directly involved in causing cancer). According to the report, “processed meat was classified as carcinogenic to humans (Group 1); red meat was classified as probably carcinogenic to humans (Group 2A).” The experts concluded that each 50 gram portion of processed meat eaten daily increases the risk of colorectal cancer by 18%.

The moment you see this report, you may feel scared, since meat might make up a significant portion of your daily diet. I felt the same. However, after I looked closely into the details of this research and its follow-up reports, I closed the website, smiled, and headed to Lerner Hall to grab my regular breakfast.

To better understand this “meat threat,” we need to know more about the classification used by the IARC monograph. There are several groups, but here we only talk about the two groups related to this case. Group 1 indicates “carcinogenic to humans,” which means that there is sufficient evidence to prove that this agent causes cancer in human beings. Group 2A indicates “probably carcinogenic to humans,” which means that there is only limited evidence of carcinogenicity in humans (but strong evidence in experimental animals or of mechanisms in humans).

Do you think that agents classified in Group 2A are extremely dangerous, and definitely not safe to eat, touch, or smell? The truth is, we need to be careful when interpreting this classification. This classification only indicates whether the agent causes cancer in human beings; in other words, it indicates whether there is a connection between the agent and cancer.
Neither the intake (how often or how much you must be exposed to the agent for it to cause cancer) nor the risk (the likelihood that cancer actually develops; the level of hazard) is included.

I will provide you with some examples. Outdoor air pollution is in Group 1, but most people in China and India are still healthy, despite the severe air pollution. Solar radiation is in Group 1, but most human beings on Earth do not have cancer due to solar radiation. Ethanol, found in alcoholic beverages, is also in Group 1, but many people drink casually. If hypothetical research found that drinking 1200 gallons of water a day was carcinogenic, then water would also be categorized into Group 1.

So, does the report seem less scary now? I will tell you something even more reassuring: of the 22 IARC members who voted on the final conclusions, seven either disagreed or chose to abstain. According to James Coughlin, a nutritional toxicologist and a consultant for the National Cattlemen’s Beef Association, this lack of complete agreement rarely happens. “The I.A.R.C. looks for consensus, and occasionally there’s one or two people who disagree. We’re calling this a majority opinion as opposed to a consensus or unanimous opinion.” Thus, though the monograph was released, it is not fully supported by every member of the team who released it. Some experts believe that there is only a weak association, rather than an absolute connection, between meat consumption and cancer.

When I examined the follow-up reports on people’s reactions to this monograph, I found something interesting. According to a Forbes survey, the meat industry was not affected: sales did not go down, and consumers did not contact processed meat companies to raise their concerns. While “meat and cancer issues” are posted all over our Facebook feeds, as if they were the most-important-thing-ever-to-happen-on-earth, most people just do not change their behaviors.
They still eat what they have regularly eaten, or they just have what they like.

This issue is just like the smoking issue. On every cigarette box, there is a printed notice that smoking is bad for your health, but people smoke cigarettes anyway. Maybe with a little bit of guilt, but that’s it. Most people do not like change. With numerous in-progress research projects today examining the “healthy lifestyle,” how many really affect people’s lives? People who want to change and lead a healthy life are continuously modifying their lifestyle, but other people always stay the same. No matter what, people who care for their health will always be healthy; people who do not care will always be unhealthy. The percentage of healthy people on Earth will not be affected by those “do not eat this, do not eat that” reports.

A personal note here: spend more time, money, and energy on medicine rather than on healthy lifestyle reports.

By Julia Zeh

Edited by Ashley Koo

Deep in the Eastern Himalayas, isolated from human activities, members of a newly discovered species of snub-nosed monkey sit with their heads between their knees, hiding from the rain. The recently discovered Rhinopithecus strykeri, a member of the snub-nosed monkey genus, is described as having black fur, an upturned face, fleshy lips, and an almost nonexistent nose. In fact, its nostrils are so wide that when it rains the monkey frequently gets water up its nose and starts sneezing. These monkeys put their heads between their knees in order to keep the water from getting up their noses during the rainy season. Scientists have used this unusual trait to track the monkeys in the wild; they follow the sound of sneezes to find the creature that they have nicknamed “Snubby.”

Unfortunately, no photographs exist of this unique new species alive in the wild. Although scientists have observed the monkeys in the wild, photographers have not yet succeeded in capturing them on film. Instead, the only photographs scientists have are of a dead individual caught by hunters, which was then eaten. Local demand for this species is high, which makes its discovery a conservation warning. Scientists estimate that there are only about 300 individuals left. R. strykeri has qualified as “critically endangered” on the International Union for Conservation of Nature (IUCN) Red List. The discovery was very recent, and yet the scientists found the species at a population level that already qualifies it for critically endangered status. This is a warning about the wellbeing of all species in such a biologically rich area.

The snub-nosed monkey is one of the 211 new species discovered in the Eastern Himalayas over the past five years. These include 133 new plant species, 39 invertebrates, 26 fish, 10 amphibians, one reptile, one bird, and one mammal (the snub-nosed monkey).
The Eastern Himalayas are one of the most biologically rich and diverse places on Earth and are a vital habitat for thousands of organisms. According to the World Wildlife Fund, the ecosystems of the Himalayas are home to at least 10,000 plant species, 300 mammals, 977 birds, 176 reptiles, 105 amphibians, and 269 freshwater fish. Of the organisms that live in the region, 30% of the plant and 40% of the reptile species are unique to this area alone. The Eastern Himalayas are a haven for biodiversity, teeming with life, and there is much still to be explored. The 211 new species demonstrate the fascinating, unique organisms living in this biodiversity oasis and imply that the age of discovery is not yet over.

Among the 26 new fish species discovered was Channa andrao, a walking fish. C. andrao is a long snakehead fish with vibrant, bluish scales that distinguish it from other snakeheads. Snakehead fish have gills, but they are still able to breathe air. In fact, this species of snakehead can breathe air for up to four days and can “walk” on land for up to a quarter of a mile, provided that the ground is wet. This adaptation is important if the fish’s habitat dries up. When this happens, the fish can survive on land, breathing air, until it rains or the fish makes its way to another source of freshwater. These fish are known to be aggressive and can grow up to 1.2 m long.

Though we may be in a post-biodiversity era of sorts, this does not spell the end of discovery. In an age that feels saturated with human knowledge about the natural world, it comes as a shock that so much exploration is still possible. Yet, even as we reach out to the stars and the outer reaches of the universe, we are still sending expeditions to the far reaches of the Earth. Despite our apparent dominance on this planet, there still exists a multitude of life about which we have little to no knowledge or understanding.

Written by Dimitri Leggas

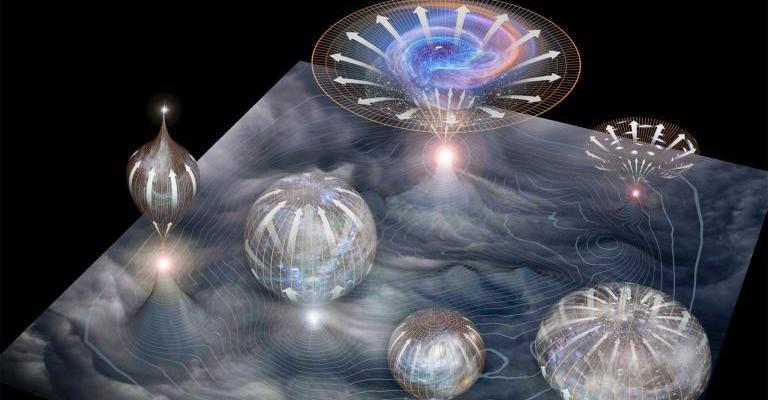

Edited by Hsin-Pei Toh

Today’s popular scientific discourse is filled with discussions of “the multiverse,” with headlines like “The Case for Parallel Universes” and “Looking for Life in the Multiverse” filling journals such as Scientific American. Many of these articles focus on the excitement of numerous universes rather than their theoretical underpinnings. The idea that infinitely many of “you” exist is impressive, if daunting, and the idea of a multiverse has become increasingly juxtaposed with divine creation. But accepting the multiverse may come at the cost of a wider scientific understanding of our universe.

It is well known that slight adjustments to any of the fundamental physical parameters would drastically change our universe, and our solar system in particular, making life as it occurs on Earth impossible. Take for instance the force of gravity. Were gravity only a few percent stronger, the sun would have burned out in under one billion years, eliminating any possibility for the evolution of complex life. On the other hand, if gravity were a few percent weaker, the center of the sun would not reach the temperatures necessary to sustain the nuclear reactions that produce the sunlight most life relies upon. A host of examples like these suggests that our universe is somehow a special place: one that is able to accommodate life hospitably.

In 1973, Brandon Carter, a British physicist, offered an explanation in the form of the anthropic principle, which states that the parameters of our universe are fine-tuned for life as we experience it, precisely because we are here experiencing it. Still, the anthropic principle does not examine why fundamental quantities in physics take the values that they do. That is, why is the gravitational constant 6.67408 × 10⁻¹¹ m³ kg⁻¹ s⁻² and not another number that would make life as we understand it impossible?
Adherents of Intelligent Design and Creationism seize on the scientific recognition of fine-tuning in order to proselytize religious ideology. They contend that the coincidences that make life possible point to our creation by a superior being. Scientists are not eager to accept these claims. After all, if countless universes existed, each with different parameters, then it would come as no surprise, according to the laws of probability, that one with our “fine-tuned” settings would exist as well.

According to Tim Maudlin, a philosopher from NYU interested in cosmology, most physicists don’t use the fine-tuning argument, but instead rely on mechanisms within physical frameworks to explain the multiverse. Two such theories are inflation and string theory. Inflation proposes a rapid expansion of the universe, driven by an energy source like dark energy, in the first trillionth of a trillionth of a trillionth of a second. This theory explains the uniformity of our universe on a large scale. A revised form of inflation called eternal inflation argues that the dark energy source for inflation is not uniform, but “marbled.” One corollary of eternal inflation is that many big bangs occur, so many universes come into being.

The notion of a multiplicity of universes finds acceptance in string theory as well. String theory aims to bridge the theoretical gaps between general relativity and quantum mechanics. Proponents of string theory argue that in addition to the three conventional spatial dimensions and the single temporal dimension, an additional six spatial dimensions exist. Mathematics predicts an extraordinarily large number (10⁵⁰⁰) of possible ways to fold these dimensions, which would be small and tucked into the more familiar ones. Each of these options corresponds to a different universe.

Some scientists are quick to accept the multiverse as an explanation for the anthropic principle. In fact, it is common to accept untestable predictions of well-tested theories.
For example, scientists generally accept ideas about what occurs inside a black hole, since general relativity has been repeatedly tested and verified. Because string theory makes predictions at extremely small scales, it remains untestable and unfalsifiable. However, many scientists are satisfied with the accuracy of predictions made by inflationary theory and take its prediction of a multiverse very seriously. Often, the mathematically simplest theories, however ontologically absurd they may seem, suggest a multiverse, whereas a great deal of mathematical maneuvering is necessary to yield a single universe.

While a multiverse may seem mathematically appealing and impressive for its sheer vastness, it would change current philosophical approaches to theoretical physics. Researchers would no longer be able to seek a single unifying theory that could elegantly describe everything, because our universe would behave completely differently from others. In a widely debated lecture, Stephen Hawking stated that philosophy is dead because it has failed to keep up with science, in particular physics. Tim Maudlin made the opposite claim, pointing out that physicists do not fully understand the relationship of fundamental constants to one another and how they might shift in order to accommodate changes in others. If it turns out that multiple universes do not exist, he argues, this should not point to intelligent design as the only remaining option. Instead, we may only need to look more deeply into the infrastructure our universe is built upon. |

|