
Written By Tiago Palmisano

Edited By Bryce Harlan

Paleo diet, Atkins diet, vegan diet, raw food diet: new and interesting food management plans such as these constantly pervade fitness culture. Weight Watchers International, for example, has capitalized on this obsession by selling specific combinations of food. Some of these “discovered” diets seem extreme or outright ridiculous, but a few of them are actually backed by research studies. One such counterintuitive diet, based on the concept of caloric restriction, is slowly gaining credibility in the scientific community.

The idea of caloric restriction (CR) is pretty much self-explanatory. As put by a 2014 article published in Nature, CR is “reduced calorie intake without malnutrition.” In other words, caloric restriction involves eating a fraction (usually between 50 and 80 percent) of the normal amount of food consumed. The important stipulation is “without malnutrition,” which means that all essential nutrients must still be included. For a human, this is the equivalent of having a trained nutritionist design a meal plan that provides all of the necessary nutrients and vitamins in a very small number of calories. This is not the same as just skipping dessert. Caloric restriction involves eating less than most people, even less than the typical healthy or lean individual. Imagine skipping every fourth meal, and you can begin to picture what reducing caloric intake by twenty-five percent really means.

A diet in which an organism approaches starvation is certainly counterintuitive, and it is indeed dangerous if done incorrectly. For this reason, the benefits of caloric restriction are still not widely accepted, and its efficacy is currently the subject of research. Previous studies have found that CR increases lifespan and slows the onset of age-associated pathologies in organisms such as mice, fish, and worms.
Remarkably, these animals lived longer simply by eating a fraction of their normal food intake (without malnutrition, of course). The mechanism by which CR increases lifespan is unknown, but one hypothesis is that consuming fewer calories slows metabolism. A reduction in metabolism is accompanied by a reduction in reactive oxygen species (ROS), which cause biological damage and are thought to play a role in aging. As one study in The American Journal of Clinical Nutrition put it, CR increases lifespan by slowing the “rate of living.”

Although a decent amount of research exists on CR in simpler organisms such as mice, only a few published studies examine its effect in primates. In 2009, a study published in Science reported the results of a twenty-year study of caloric restriction in rhesus monkeys, close relatives of humans. At the end of the experiment, eighty percent of the monkeys on the CR diet were still alive, compared with only fifty percent of the normally fed animals. Additionally, the monkeys on the CR diet developed, on average, less cardiovascular disease, diabetes, and cancer.

While this result seems to suggest that caloric restriction could increase human lifespan, much more scientific work is needed before this diet can safely leave the laboratory. Other studies of CR in rhesus monkeys have been less conclusive, and ethical dilemmas make it difficult to gather data on long-term CR in humans. However, some brave participants have tested the effects of significantly cutting their food intake, albeit on a much shorter time scale. This September, the National Institutes of Health released an article about a clinical trial in which healthy individuals curtailed their caloric intake by approximately twelve percent over a two-year period.
The results were generally positive, with a decrease in risk factors for diabetes and heart disease and an increase in “good” cholesterol (HDL).

Despite being counterintuitive, the CR diet has started to seep into popular culture. A reporter for New York Magazine experimented with caloric restriction for two months in 2007 and detailed the experience in a feature appropriately titled “The Fast Supper.” There is even an international Caloric Restriction Society, which seeks to spread information about the benefits and guidelines of a CR diet.

However, the scientific evidence for the effects of CR in humans is nowhere near airtight. We should wait for better and more substantial data before endorsing CR as an option for a healthy lifestyle. For now, there are too many unknowns to justify a lifelong commitment to a potentially dangerous diet. Until science has caught up, it’s probably a good idea to stick to Weight Watchers.


Written By Jack Zhong

Edited By Josephine McGowan

On October 5, 2015, the Nobel Prize in Physiology or Medicine was awarded to William C. Campbell and Satoshi Ōmura for “their discoveries concerning a novel therapy against infections caused by roundworm parasites.” Youyou Tu shared the prize for “her discoveries concerning a novel therapy against Malaria.” The award recognizes these scientists’ contributions against pervasive diseases, and their similar approaches to combating those diseases are ingeniously intuitive. Indeed, they demonstrate that the best way to combat these diseases is not to synthesize treatments in the lab, but rather to derive natural remedies from the world around us. Their successful strategies and findings open up new possibilities for curing widespread maladies.

Parasitic diseases still plague mankind today, as they have for thousands of years. They range from river blindness to elephantiasis, and from scrotal hydrocele to malaria. The symptoms are as wide-ranging as the diseases: some cause blindness, others lead to disabling symptoms, and still others lead to death. On a larger scale, parasitic diseases worsen living conditions for those who suffer from them. Although diseases like malaria do not seem to be a large problem in the US, parasitic diseases are quite prevalent and deadly in sub-Saharan Africa, South Asia, and South America. They affect vulnerable patient populations who are often also affected by hunger, water shortages, and military conflicts.

In attempting to address these problems, Dr. Ōmura and Dr. Campbell began by assessing natural products in the soil. Dr. Ōmura is an expert in the isolation of natural products. A natural product is a chemical or mixture found in nature rather than one produced in a laboratory. Dr. Ōmura focused on a group of soil bacteria (Streptomyces) known to produce antibacterial chemicals.
Streptomyces bacteria were notoriously difficult to keep alive in the laboratory, but Dr. Ōmura developed methods to grow them under laboratory conditions. Using these methods, he discovered and characterized new strains of the bacteria from soil samples and cultured them. From thousands of strains, he picked the most promising ones and analyzed the chemicals they produce. A strain that he found near “a golf course in Ito, Japan” would eventually yield an effective compound called Avermectin, which can treat a variety of parasitic worms, such as intestinal nematodes.

Collaborating with Dr. Ōmura, Dr. Campbell studied the cultures that Dr. Ōmura produced. Dr. Campbell began by testing broths of dead bacteria on mice with parasitic infections. He worked with Thomas Miller, a fellow researcher, to study the chemistry of the effective components, and he demonstrated the antiparasitic effects of these agents in domestic and farm animals. Using chemical techniques, Dr. Campbell modified Avermectin into Ivermectin, a more effective version. With few side effects on the host, Ivermectin is effective against various parasites in both humans and animals. Ivermectin was used in clinical trials in 1981, successfully eliminating river blindness in patients. The drug was found to act through chloride channels in the muscle and nerve cells of the parasites; its administration paralyzes the parasite in the host. Ivermectin has effectively reduced the burden of diseases caused by parasitic worms, such as lymphatic filariasis and river blindness.

Meanwhile, in the 1960s, Dr. Tu began developing a treatment for malaria, a deadly disease transmitted by mosquitoes. She searched through historical documents of Chinese medicine for treatments against fever. Dr. Tu’s research group found that sweet wormwood appeared in hundreds of Chinese medicine recipes for treating fever (Figure 3).
Her initial results in treating rodent malaria with sweet wormwood extract were mixed. In response, Dr. Tu studied the recipes of Ge Hong (340 A.D.) and discovered a cold extraction method to isolate the active ingredient from sweet wormwood. The results were very successful, leading to the discovery of the drug Artemisinin. Artemisinin used in combination with other antimalarial drugs has significantly reduced malaria rates globally. The drug’s action has been linked to the parasite’s Kelch 13 protein, mutations in which are associated with delayed parasite clearance.

Today, many people perceive the drugs we use as synthesized and engineered by humans, and some believe that we are past the age of “traditional” medicine. Yet the discoveries of these three scientists have saved countless lives from devastating parasitic diseases. The stories of their research processes and scientific methods are fascinating, and they demonstrate that many of the most effective drugs can come from studying nature and the medicinal remedies of the past. Dr. Ōmura and Dr. Campbell created Ivermectin from bacterial extracts. With the help of traditional Chinese medicine, Dr. Tu isolated Artemisinin from sweet wormwood. These Nobel laureates illustrate the power of optimizing what is already abundant in nature. There may be many more cures locked away in the soil and in plants, waiting for us to discover them. Therefore, when searching for cures, we must not let hubris blind us to the wonders of nature.

By Yameng Zhang

Edited By Thomas Luh

What is herbal medicine? According to the University of Maryland Medical Center, herbal medicine (also called botanical medicine or phytomedicine) refers to using a plant’s seeds, berries, roots, leaves, bark, or flowers for medicinal purposes. The 2015 Nobel Prize in Physiology or Medicine was awarded in part for a further application of herbal medicine, “concerning a novel therapy against Malaria.” Specifically, the Chinese scientist Youyou Tu found a highly effective chemical, Artemisinin, extracted from the plant Artemisia annua, which can inactivate Plasmodium falciparum (one of the protozoan parasites that cause malaria) through a complex mechanism that includes the inactivation of its mitochondria. Youyou Tu’s discovery is based on close study of herbal medicine and was guided by a Chinese herbal medicine text more than a thousand years old, the Zhouhou Beiji Fang (Handbook of Prescriptions for Emergency Treatments).

Another memorable success in the history of medicine closely resembles Youyou’s: the discovery of aspirin. Aspirin also originated in an herbal medicine, willow. According to the Ebers Papyrus, an ancient Egyptian medical text, willow was recorded “as an anti-inflammatory or pain reliever for non-specific aches and pains.” In the nineteenth century, scientists succeeded in extracting salicylic acid, the effective ingredient in willow bark, as a pain reliever. Chemists were later able to modify and artificially synthesize this acid. Thus, aspirin was born and put into mass production.

Herbal medicine has a long and solid history. The Ebers Papyrus of Egypt, dating back to the second millennium BC, already discusses a large number of herbal medicines, including cannabis, fennel, cassia, senna, thyme, juniper, and aloe. While reading Homer’s Odyssey for my Literature Humanities class, I found echoes of the Papyrus as well.
Egypt is described as a land “where the fertile earth produces the greatest number of medicine.” There is also a specific line stating that “she [Helen] cast a medicine of heartease, free of gall, to make one forget all sorrows.” After careful research, I came across Ferula assa-foetida, a common herb in ancient Egypt used to calm hysteria and nervousness, which could be a candidate for Helen’s medicine.

In ancient Rome, herbal medicine played a significant role in medical care, and a systematic study of herbal medicine had also taken shape. Dioscorides, one of the two most important medical figures of Rome, whose contributions to medicine remained unparalleled, devoted eighty percent of his most significant book, De Materia Medica, to herbal medicine. He was the first to analyze herbal medicines by category, and he lists the features of each one in an orderly way. This book became a solid scientific foundation for the study of herbal medicine. In the Middle Ages, “the Muslim Empire of Southwest and Central Asia made significant contributions to medicine.” Moreover, because of several serious plagues at that time, dozens of centers for the study of herbal medicine were built and herbal medicine education was promoted. During the Renaissance, many ancient classics about herbal medicine were reintroduced into Europe.

Today, however, herbal medicine is not a popular practice in the medical industry, given the striking effectiveness of antibiotics. When I examined herbal medicine more closely, I was drawn to its long history, and I felt it a great pity that human beings would simply abandon this well-developed body of medicine, which is truly a treasure. Although herbal medicine has its own drawbacks, such as difficulties in transportation and preservation, the discoveries of aspirin and Artemisinin exemplify a new way we can use our knowledge.
Given the high cost and instability of the original herbal extracts, scientists can apply chemical modifications to these natural extracts, after which mass production can be achieved easily. Herbal medicine, I believe, could offer numerous insights for modern medicine.

By Kimberly Shen

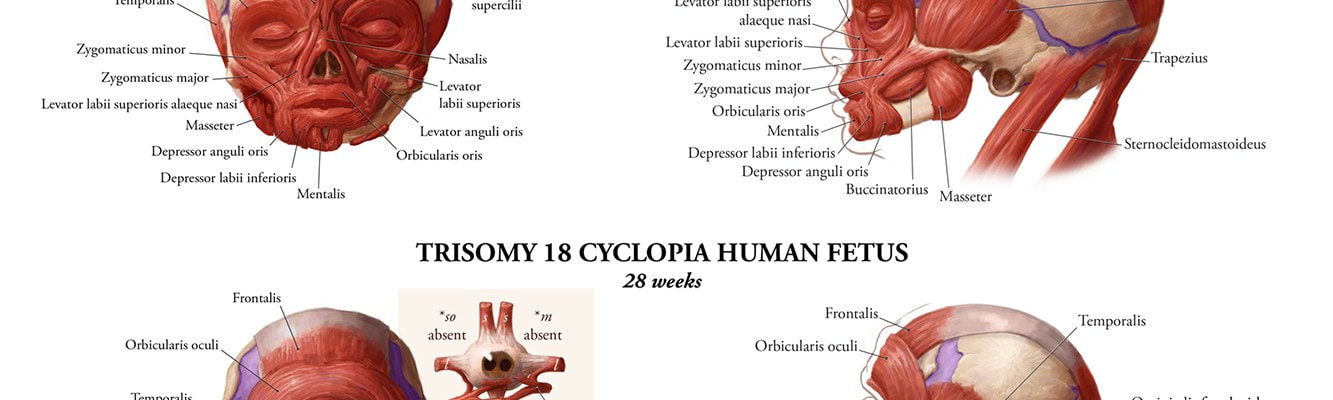

Edited by Arianna Winchester

“Over the years that followed, I found myself thinking from time to time of that picture, my hand over the baby’s mouth. I knew then, and I still think now, that the right thing to do would have been to kill that baby.” These were the words of Dr. Frederic Neuman as he reflected on his disturbing experience with the infant he called “the Cyclops Child.”

Cyclopia is an extremely rare type of birth defect that appears when the embryonic forebrain fails to divide the orbits of the eyes. As a result, infants born with this disorder generally have only one eye and no nose. During normal embryonic development, a structure called the neural tube develops into the nervous system. In an embryo with cyclopia, however, the neural tube is defective, so the brain, eyes, nose, and mouth fail to develop normally.

Around 1960, Dr. Neuman, then a medical intern, helped deliver a baby with cyclopia at St. Vincent’s Hospital in New York City. Many years later, he recalled seeing the child born with one eye in the middle of its forehead. Because the infant’s esophagus never separated from its trachea and led to its lungs instead of its stomach, the child was also unable to eat. Upon seeing the severely deformed infant, the team of doctors Dr. Neuman worked with decided not to tell the parents about the birth defect. Instead, they falsely informed the parents that their child had died at birth. Because infants with this disorder generally die soon after birth, the doctors and nurses expected this infant to die quickly. They abandoned the baby in the back of the hospital nursery and left the infant to starve. To their surprise, the baby lived for thirteen days.

What makes this story even more disturbing is that a surgical procedure was needlessly practiced on the dying infant. Under the orders of his senior resident, Dr. Neuman performed a finger amputation on the infant without anesthesia. When he recounted this experience later, he recalled his horror upon realizing that the child could actually feel pain. He recalls that “when [he started amputating the finger], it screamed…[his] hands began to shake. The kid was in pain. It could feel pain.” He regrets his actions, saying, “I should’ve realized that, but somehow I did not. It was because the baby did not really look like a baby.” When the baby died, Dr. Neuman recalled feeling a combination of sadness and relief, saying, “It was awful for the child. It’s hard to imagine a child that is not comforted when it is picked up. You can’t feed it when it’s hungry. You can’t do anything for it.”

Dr. Neuman’s chilling story has raised many ethical questions both inside and outside the medical field. Among his strongest critics are members of the disabled community, who firmly believe that the hospital staff had no right to decide whether to let the baby die. In response, Dr. Neuman has stated that he recognizes this is an extremely controversial issue for the disabled community because of its members’ own experiences.

By Tiago Palmisano

Edited by Bryce Harlan

Malaria is one of the best-known parasitic diseases in the modern world. Much of its fame (or infamy, to be precise) is driven by the incredible access to medical knowledge that comes with the age of smartphones. World Malaria Day was established in 2007, and the disease has even inspired a British-American TV film, Mary and Martha. Yet a more probable reason why malaria is so well known is the miserable fact that for centuries it has been one of the most prolific killers known to man. According to the World Malaria Report 2013 released by the WHO (World Health Organization), there were an estimated 207 million cases of malaria in 2012, of which over 600,000 were fatal.

The disease is primarily associated with mosquitoes, but these insects are only the vehicles of transmission. Malaria is caused by any of five species of single-celled parasites of the genus Plasmodium, which most often enter a human’s bloodstream via the bite of a female mosquito. The parasites invade and destroy liver cells and red blood cells. Infections typically occur in sub-Saharan Africa and other equatorial regions.

Malaria has been a part of human society since antiquity; references to the disease can be traced back to ancient Greek and Chinese writings. Despite this long history, the biggest step in fighting malaria did not occur until about forty years ago. In the late 1960s, the prevalence of malaria prompted China to launch a national research project to develop new treatments for the disease. DDT (an insecticide) and chloroquine (an antimalarial drug), both developed by scientists, were the tools of choice at the time, and both were losing their effectiveness due to increasing resistance. One team working on this Chinese national project was led by Youyou Tu. Forgoing the idea of synthesizing a new drug, Youyou and her team investigated ancient Chinese herbal recipes and eventually homed in on a plant known as Artemisia annua, or sweet wormwood.
Youyou isolated a compound from the plant, artemisinin (qinghaosu in Chinese), which she discovered had remarkable antimalarial effects. To find an effective way of obtaining artemisinin from the plant, the team again turned to ancient writings. Inspired by The Handbook of Prescriptions for Emergency Treatments (a Chinese medical text from 340 AD), Youyou experimented with a cold extraction process that ultimately allowed her to successfully isolate the drug on October 4th, 1972. In subsequent experiments, artemisinin proved extremely effective against strains of malaria in mice and monkeys. Youyou wrote about this “breakthrough in the discovery of artemisinin” in her 2011 commentary published in Nature Medicine.

In the years that followed, artemisinin became the go-to drug for treating malaria, and Youyou Tu was awarded part of the 2015 Nobel Prize in Physiology or Medicine for her work. Indeed, artemisinin has alleviated a great deal of suffering worldwide. Between 2000 and 2013, malaria treatment centered on artemisinin contributed to a forty-seven percent decrease in the global malaria mortality rate. Despite this progress, the parasitic disease remains a major issue in modern society, as the hundreds of thousands of fatal cases in 2012 demonstrate.

An important lesson from Youyou’s research is the potential of looking to antiquity for medical inspiration. Even in an age of modern pharmaceutical technology, the answers to some of our most pressing physiological problems may be found by looking to the past.

Written by Dimitri Leggas

Edited by Hsin-Pei Toh

A few years ago, 23-year-old Kim Suozzi died of cancer. She and her boyfriend, Josh Schisler, had decided to have her brain cryogenically frozen in hopes of preserving the synaptic intricacies that helped make her who she was. Suozzi and Schisler hoped that one day, when we better understand the human mind, her mind could be brought back in another form. This concept is called mind-substrate transfer (MST), commonly known as “mind-uploading.” Ray Kurzweil, director of engineering at Google, claims that our computational and technological capabilities are progressing at a rate that will allow for MST by 2045 and the replacement of our entire bodies with machines by 2100.

Mind-uploading is the process of copying mental content from a brain and transferring it to a computational device capable of further simulating intelligent processes via artificial neural networks. Although the technology is not currently realizable, one of the most obvious reasons for pursuing it is to immortalize an individual. One active area of research with this goal in mind is neural prosthetics. Historically, this involved providing artificial input via electrodes to regions of the brain that no longer function properly. Recent work has shown that neural prosthetics may also substitute for certain brain circuits essential to internal brain function. What is not clear is how to extend this technology to the entire brain or the central nervous system. Most likely, it would involve gradually replacing the brain with prosthetics that emulate the parts they replace. Two other approaches to MST do not require the gradual addition of prosthetics: the ‘reconstruction from a scan’ method and the ‘reconstruction from behavior’ method.
The ‘reconstruction from a scan’ method uses a scan of the brain to record its neural and synaptic functions for later simulation; until more sophisticated high-resolution scanning technologies exist, this may involve euthanasia, cryogenics, and taking a mold. The ‘reconstruction from behavior’ approach is less invasive and involves gradually parameterizing a person in software form by observing that individual’s behavior. Instead of gradually replacing a biological brain, these methods require the complete reconstruction of the mind.

If scientists plan to reconstruct a person, a “self,” it’s important to ask what the self even is. Do you view you as a brain, as a body, or possibly as a soul? Are you some amalgamation of all these things? We need to think about what is lost in the process of MST, and to ask whether it is possible to maintain a continuous sense of identity between bodies. If a mind is transferred from a body to a non-biological machine, does the mind maintain its original identity? Kenneth Hayworth, an MST researcher who wants his own brain preserved for copying, said, “What do you consider to be ‘you’? Mind uploading is useless if this personal definition of ‘you’ is not successfully transferred.” I tend to view “me” as my mind. But my family most likely recognizes me by my body, my physical attributes, not my mental content. If Suozzi is revived in 2045, will she feel like her old self? Perhaps, but will Schisler feel like he has his partner back? It’s not clear that MST can avoid these crises of identity, which may well outweigh any preservation of cognitive capabilities.

Written by Julia Zeh

Edited by Ashley Koo

Along the grassy ocean floors and in the valleys of Yellowstone National Park there exists a landscape of fear. Although the phrase conjures up the image of a horror movie, the landscape of fear is really an ecological phenomenon involving the role of fear in natural predator-prey relationships. This important phenomenon is produced by apex, or top, predators, such as sharks and wolves.

When one member of an ecosystem is affected, the entire ecosystem naturally feels the effects. These impacts take the form of something called a trophic cascade. The word ‘trophic’ refers to feeding or nutrition, so a trophic cascade is a trickle-down (or trickle-up) effect along a food chain. Because predator-prey relationships connect different groups of organisms, a change in the population size of one species can cause an increase or decrease in the population of another. Trophic cascades are an indirect result of predation, so even top predators can indirectly influence plants. Each group of organisms is kept in check by every other group with which it comes into contact, either directly or indirectly. A landscape of fear is one kind of trophic cascade: the mere presence of top predators can influence animal behavior and thus entire ecosystem dynamics.

This landscape of fear has been witnessed in Yellowstone National Park since the return of the wolves. In the 1920s, the last of Yellowstone’s wolves were eliminated. In the 1990s, however, the decision was made to reintroduce wolves to Yellowstone, in the belief that the entire ecosystem would be healthier with them back in the park. By the time the wolves returned, plant populations had decreased drastically. A trophic cascade had occurred. With the loss of the wolves, the elk population, the wolves’ primary prey, had exploded.
As a consequence of the sudden increase in elk, plant populations suffered a huge blow. Aspen trees were hit particularly hard, because the elk grazed on them before they could grow tall. Once the wolves returned, however, the elk population was back in check and the aspen population normalized. But direct predation was not the wolves’ only impact; they also changed the behavior of the elk. The elk spent more time grazing in flat areas and far less time in riskier areas that would be harder to escape if wolves showed up. Trees and shrubs could grow considerably larger in these risky places because the elk that would normally consume them before they grew very tall were now avoiding the areas. Entire ecosystems that rely on larger plants were revived in the areas the elk considered risky, all because of an atmosphere of fear induced by the presence of wolves.

Interestingly, tiger sharks also produce a landscape of fear, one that changes the behavior of dugongs. Dugongs and tiger sharks live together off the coast of Australia in a typical predator-prey relationship. Dugongs are large marine mammals, the Australian and East Asian equivalent of the American manatee, and are preyed on by large tiger sharks. Affectionately nicknamed sea cows, they graze on the seagrass that grows along the ocean floor and thus seriously affect its populations. Just like the elk, dugongs adapt their behavior to the presence of predators, taking fewer risks in where they feed and thus affecting the plant populations of the ecosystem. When sharks are present, dugongs overuse the seagrass in deeper areas, even though these areas have less seagrass for them to consume. The potential for food is higher in shallow areas, but so is the potential for a shark attack. This trade-off is particularly important in determining how the tiger shark population may drastically, though indirectly, affect the structure of seagrass populations.
As we observe more of the relationships between organisms and their behaviors, we continue to decipher the interrelatedness of the natural world. Whole ecosystems are influenced through trophic cascades, which can occur through fear-induced behavioral changes. The landscape of fear is not just a fascinating aspect of animal behavior and ecology (and a name that would excite anybody, regardless of their science background); it also has enormously important conservation implications. The landscape of fear, along with other ecological phenomena, demonstrates the multitude of connections among different forms of life on Earth. It reminds us just how cautious we as humans need to be, given our huge impact on other organisms. If humans eliminate, displace, or even just shrink one small population of a species lower on the food chain, the effects on countless other organisms can be catastrophic. Humans have already taken a heavy toll on tiger sharks, which are threatened, and wolves, which are endangered. The predators themselves are at risk, and so are the entire ecosystems in which they shape population sizes and behaviors. Each living thing is an important component of a bigger web that spans the globe, and damage to one part damages the whole.

By Yameng Zhang

Edited by Thomas Luh

Last summer, I stumbled upon Bernard Mandeville’s The Fable of the Bees while learning how to keep bees in the mountains of Yunnan Province. The poem was not quite the introduction to beekeeping I was looking for; it is an allegory relating life in the beehive to economics and human social behavior. Mandeville discusses how responsibilities and social behavior lead bees toward prosperity. From bees, we can learn a lot. No one can deny that they are far more than just a source of honey; they are intelligent social animals. Covered from head to toe on the beekeeping farm, I found myself unable to stop thinking about Mandeville’s poem:

“A Spacious Hive well stock'd with Bees, That lived in Luxury and Ease; And yet as fam'd for Laws and Arms, As yielding large and early Swarms; Was counted the great Nursery Of Sciences and Industry. No Bees had better Government, More Fickleness, or less Content.”

This seemed to describe exactly the prosperous scene in the beehives I was tending. Bees have built their unique and highly ordered society over millions of years. In his book The Social Conquest of Earth, E.O. Wilson says that bees cannot evolve human-level intelligence because of their small brains, but he implies that these delicate creatures possess incredible intellectual ability for their size. What is the nature of their intellect? Scientists, I learned, still do not know. We cannot even decipher bees’ language. Is it possible that a busy colony of bees acts not from instinct but from an intellect, one that could even rival the sophisticated human brain? This thought is disturbing for many people, because if it were true, we would have to accept that one third of our agriculture is controlled by creatures as clever, or even as crafty, as we are. We will not, however, face an immediate “threat” from bees, at least not anymore.
Since 2006, bee numbers have declined by 50% in Europe and the United States. Scientists use the term Colony Collapse Disorder (CCD) to name this phenomenon, although they have not yet succeeded in explaining it. I was deeply concerned. The extinction of bees would mean a world without eighty percent of its current crop production: imagine meals without cucumbers, potatoes, apples, berries, almonds, or even coffee. A decline in bees could, in turn, result in a loss of biodiversity as well, which would cause negative ripple effects throughout our world.

After three weeks as a beekeeper, I began to understand these creatures better. I learned the entire process of honey production, from tending beehives to extracting honey from combs and packing the purified honey into bottles. The first time I extracted honey from the hives, I caught a drone bee and put it on the palm of my hand. As it wandered curiously, without direction, across my skin, I felt a magnetic connection. I truly understood what Mark Winne, an expert in food security, refers to as the “primal unity between humanity and nature.”

Through this experience, I gradually came to understand some of the possible causes of CCD. The growth of industrialized agriculture is a significant one. We prevent birds from eating pests and use pesticides instead; we ignore the weather and grow vegetables and fruits in greenhouses; we give no thought to maintaining a balanced ecosystem and practice monoculture on a huge scale. Our new production system removes the natural element from the growing process, including the role of bees (consider artificial pollination). If we alienate nature, there will be consequences. Could the scene in The Fable of the Bees come to pass?
In the poem, Mandeville does not explain why the bees in an abundant society suddenly change their behavior, lose interest in honey production, and instead “all the Rogues cry'd brazenly, Good Gods, had we but Honesty.” Could it be that the neonicotinoids in pesticides interfere with bees’ nervous systems, causing their brains to work incorrectly? My passion for bees derives not just from admiration for this species but also from guilt over what human beings have done to nature. Is it too late? Will we be able to prevent the extinction of bees and preserve their role in organic agriculture? Most importantly, if bees stand up and embrace their extinction, what is next for us?
