By Anna Christou

Physicians and scientists have been working to develop cancer therapies that are more personalized and that also minimize damage to healthy tissue. For example, researchers have recently developed strategies that either target the genetic mutation that causes the cancer or that harness the immune system’s natural killing ability in order to destroy tumor cells. Notably, embolization therapy, which consists of blocking blood flow to the tumor, has emerged as a novel treatment that is particularly useful for combating liver cancer. According to the American Cancer Society, the incidence of liver cancer, as well as its death rate, has increased dramatically in recent years. Specifically, it was projected that 42,200 new cases would be diagnosed in the United States in 2018 and that 30,200 people would die from this cancer. Liver cancer is especially difficult to treat with surgery because there are many blood vessels and bile ducts in the liver that make it risky. Therefore, embolization therapy—a minimally-invasive procedure performed by interventional radiologists to block blood flow to the tumor—offers a promising alternative to surgery. By preventing blood from reaching the tumor, embolization prevents the tumor from receiving the oxygen and nutrients that are necessary for its survival and growth. In addition to targeting the tumor cells more effectively than surgery, embolization therapy is also less invasive and less harmful to the surrounding blood vessels and bile ducts in the liver. This therapy also has several other advantages, including faster recovery time, no need for general anesthesia, no scarring, and lower risk of infection. While radiation therapy—which uses high energy waves to kill large numbers of cancerous cells while largely preserving the organ in question—is still an alternative to surgery, radiation damages the surrounding tissue, once again making embolization the best option. 
In order to block blood flow to the tumor, interventional radiologists, the physicians who perform embolization procedures, inject beads into the bloodstream that travel to the site of the cancer. The beads, which can be made up of a variety of materials such as gelatin, then physically block the artery, stopping the blood flow to the tumor. A challenge to this therapy is making sure to only block blood flow to the tumor, while leaving the blood supplied to healthy tissue intact. However, the anatomy of the liver circumvents this challenge and makes this therapy particularly advantageous for treating liver cancer. The liver is unique in that it is connected to two sources of blood. First, like other organs, the liver receives oxygenated blood from the heart through the hepatic artery. Second, the liver also receives blood directly from the digestive system through the portal vein so that it can process toxins that were ingested and passed through the digestive system. In this case, the blood supply to a certain liver cell depends on whether the cell is normal or cancerous; healthy cells receive blood from the portal vein whereas cancerous cells receive blood from the hepatic artery. As a result, in embolization therapy, only the blood supply from the hepatic artery is blocked, thereby reducing the blood—and consequently the oxygen and nutrients necessary for survival—of cancer cells, while leaving healthy cells intact. In addition to blocking blood flow to the tumor, embolization beads that contain added chemotherapy agents can further block and kill tumor cells through a technique called chemoembolization. By interfering with cell division, chemotherapy not only halts the growth of cancer cells but also stresses them to induce cell death. In particular, the chemotherapy agent doxorubicin, or adriamycin, is most often used along with embolization. 
This drug blocks topoisomerase 2, which is an enzyme that ensures that the two daughter cells resulting from cell division each contain the original DNA of the parent cell. As a result, by preventing DNA from being replicated, cell division is disrupted, halting the growth of the tumor. Delivering chemotherapy agents to the cancer cells through embolization targets the tumor cells more accurately to minimize the side effects that usually accompany chemotherapy, such as hair loss and nausea. Recently, chemoembolization has been taken to a new level with the addition of radiopaque beads, which allow for more precise and targeted treatments. A radiopaque substance blocks radiation, rather than letting it pass through, so it can be seen on x-rays. For example, bone, unlike air or fat, is radiopaque, which is why bone is visible as a white structure on an x-ray. Scientists create these beads by adding a radiopaque molecule to a gel such that the resultant bead can then block radiation. As a result, when these radiopaque beads, which could also contain chemotherapy as previously mentioned, are injected into the bloodstream, interventional radiologists can track the location of the bead through fluoroscopy, a type of x-ray that relays real-time moving images of the body. Therefore, rather than just deliver the chemotherapy-filled beads to the patient without knowing whether they are properly placed or not, doctors can instead view the position of radiopaque beads with x-ray images. This is especially important since blood vessel size and position may vary between patients, so the placement of the beads may not be the same for all people. As a result, because physicians can gain an inside look into the body and track the location of the beads, they can ensure that the bead is properly positioned and adjust it, if necessary, to provide a more targeted treatment to the specific patient. 
The Food and Drug Administration approved the use of radiopaque chemotherapy-filled beads for embolization procedures in 2015, and since then, hospitals have been conducting clinical trials to fine-tune the procedure, for example by determining the optimal duration and dose of chemotherapy. Embolization therapy is a novel treatment that targets cancer cells effectively and precisely while minimizing damage to the surrounding healthy cells. Hopefully, this development in liver cancer treatment will pave the way for similar advances in the treatment of other cancers.

By Kyle Warner

The Intergovernmental Panel on Climate Change’s (IPCC) recent report makes it clear that if we are to avoid the worst impacts of global warming, we need to make drastic industrial-scale changes now. The report not only confirms the need for a reduction in greenhouse gas emissions, but also highlights the importance of pursuing carbon capture and storage technologies to undo the damage that has already been done. To keep global temperatures from rising more than 1.5 degrees Celsius above pre-industrial levels, the benchmark limit set by the Paris Agreement, going carbon neutral will not be enough; going carbon negative is now mandatory. Fortunately, there are wide-ranging applications for capturing and using carbon, and with the budget bill signed by President Trump earlier this year providing incentives for carbon capture, these technologies are developing at a rapid pace. In its most general form, carbon capture and storage refers to the process of removing waste carbon dioxide (CO2) from point sources and either storing or utilizing it in a way that prevents it from entering the atmosphere. The most logical application for carbon capture technology is at the greatest carbon emitters: fossil fuel power plants. In these cases, there are three approaches that can be taken: post-combustion capture, pre-combustion capture, and oxy-fuel combustion. For pre-existing power plants, post-combustion carbon capture is the most viable option because it can be easily implemented on most smokestacks. This process separates CO2 from “flue gases,” which are released during the combustion of fossil fuels. A filter-like “scrubber” that acts as a solvent for CO2 is fitted to the smokestack, and once the scrubber becomes saturated, it can be heated to release water vapor and leave behind concentrated liquid CO2.
Post-combustion carbon capture effectively prevents 80-90% of a plant’s carbon emissions from entering the atmosphere, but companies still have little incentive to pursue this technology. It costs roughly $60 per metric ton to capture and store carbon, whereas the budget bill offers only $50 per metric ton, leaving a gap of about $10 per ton. There are currently only seventeen large-scale post-combustion carbon capture plants in the world, which absorb less than 1% of global emissions, but rapid growth should be anticipated as the technology becomes cheaper and as environmental lobbyists and government officials place increasing pressure on power plants. For future power plants, carbon capture will be best achieved through pre-combustion capture and oxy-fuel combustion. During pre-combustion carbon capture, the fuel source is heated in pure oxygen prior to combustion, resulting in a mixture of carbon monoxide and hydrogen, which is then treated with a catalytic converter to produce CO2 and more hydrogen. Subsequently, a chemical compound called an amine is introduced that binds to the CO2, and these newly formed molecules sink to the bottom of the mixture. The CO2 can then be easily separated from the fuel, and the excess hydrogen can be used in alternative energy production or as a future fuel source that can, for example, power cars and heat homes. Although cheaper than post-combustion carbon capture, pre-combustion capture is not applicable to older pulverized coal power plants, which unfortunately make up the majority of the current fossil fuel energy market. However, pre-combustion capture remains an efficient, cost-effective method of CO2 removal and should be a significant consideration in all future power plant designs. With oxy-fuel carbon capture, the fossil fuel is combusted in nearly pure oxygen rather than in ordinary air.
This unique form of combustion releases flue gas consisting of high concentrations of CO2 and water vapor, which can then be easily separated out by cooling and compressing the gas. While this is effective at preventing 90% of the CO2 released from entering the atmosphere, the cost of the oxygen required for combustion is still too high for large-scale applications. After the CO2 has been captured, it needs to be either transported to storage or reallocated for commercial use. Methods of transport follow the same traditional means as oil or natural gas (road tankers, ships, and pipelines), but each project comes with its own considerations, and an appropriate method of transport for CO2 should be chosen subject to planning and health and safety regulations. Large commercial networks of reliable CO2 pipelines have been in place for over thirty years, but there remains significant potential for development of local and regional pipeline infrastructure to lower the cost of carbon capture altogether. Once transported, CO2 is most efficiently stored in porous geological formations several kilometers beneath the Earth’s surface. Examples of acceptable sites include old oil and gas fields and deep saline aquifers. These sites subject the CO2 to high pressures and temperatures that allow it to remain in the liquid phase and prevent ease of escape. Once injected into the ground, the CO2 rises until it becomes trapped under an impermeable layer of rock. Over time, this CO2 will either dissolve into the surrounding salt water and sink to the bottom of the storage site, or it will chemically and irreversibly bind to the surrounding rock. Although most of the CO2 captured today is stored underground, there are several promising applications for commercial use as well. One of the most encouraging commercial applications for carbon capture is the use of carbon fiber in architecture and building materials. 
CO2 captured from the air can be used to directly produce carbon fiber, which is well suited to structural support because it is lighter than steel, five times stronger, and twice as stiff. As of now, carbon fiber production from captured CO2 has only been used in small-scale projects, and more research is required to decrease costs enough for large-scale implementation. This is a potentially massive future market, however, that could significantly increase incentives for pulling carbon from the atmosphere and thus deserves significant investment. Other promising applications for captured carbon include the sale of liquid CO2 to soda companies for beverage carbonation, the production of carbon-neutral synthetic fuels identical to gasoline, the use of degradable plastics made from CO2, and the incorporation of CO2 into desalination plants to stabilize pH levels. As the IPCC report states, we must act now to stabilize the climate and prevent catastrophic weather events, sea level rise, and ocean acidification. It is vital that carbon capture becomes more widespread and that applications such as these continue to be developed if we are to become carbon negative. We have all of the capabilities necessary to achieve this goal, but if we do not act urgently, we will reap the consequences for centuries to come.

By Hari Nanthakumar

In the common and just slightly overused film representation of cavemen, our ancestors gaze at the bright, fluttering, and stupidly majestic light of fire with expressions of varying combinations of amazement, fear, and confusion, and all with an infinite curiosity that often leads to Hollywood’s idea of a well-thought-out joke—charred hair and soot-covered skin. Having higher intelligence and sophistication (or so we think), we might laugh at our foolish predecessors, who were amazed and befuddled by something as ordinary and simple as light. The closest we seem to get to that same feeling of awe in the face of light nowadays is staring at the bright lights of Butler Library late at night with sleep-drunk and beautifully clueless minds. However, a change is brewing. Recent developments in the use of light to revolutionize materials and products are beginning to bring out that same feeling of exhilaration and awe that our ancestors felt so many years ago. Just last year, Adidas, together with the startup Carbon, released the Futurecraft 4D, a sneaker manufactured through Digital Light Synthesis (DLS). It seems a little wild, but this essentially means that the sneaker was carved out by light. In DLS, an ultraviolet light is used to harden a photocurable polymer resin, a liquid solution that is reactive to light, within minutes. By projecting an image of the shoe onto the mixture, thereby controlling which parts of the resin harden, the light very quickly chisels out the structure of the shoe, leaving its structure surrounded by a solution of uncured resin. The structure is then baked, leaving the fully-functional prototype of a shoe. This is the first time that such a method has been used to manufacture shoes. Previously, three-dimensional printing had been used to create certain components of footwear, but the finished product still required more traditional methods such as molding, which can take as long as a month. 
These additional processes severely extended the time needed to develop new innovations and versions. DLS changes this. Digital light allows the creation of several new, specially tailored versions within a single day, advancing the landscape of the footwear manufacturing process for years to come. The incredible flexibility in the projections of light that can be produced gives manufacturers the unique ability to easily influence the architecture of the shoe. It opens up a future in which shoes are made to beautifully and perfectly fit everyone’s feet and to suit their specific tasks, from running to daily life, with the entire process from design to product lasting a matter of minutes. And as the process becomes more common and more cost-efficient, footwear made out of light will be everywhere. This is not the first revolutionary product to come out of simple light. In 2015, Boeing, in partnership with HRL Laboratories, revealed the lightest metal in the world, created using a similar light-based manufacturing process. Using a UV light array projecting ordered rays into a polymer resin, Boeing and HRL created a microlattice structure that was electroplated with nickel. The polymer was then dissolved away with acid, revealing an ultralight, hollow metallic microlattice that was 99.99% air, lightweight enough to sit comfortably on a dandelion. However, this metal is no weakling. The lattice structure maintains the metal’s strength, giving it high elasticity and strong energy-absorption properties. Because it is both ultralight and strong, the nickel microlattice can be incorporated into next-generation aircraft components, creating the potential for large gains in fuel efficiency and waste reduction. The uses of the metal do not stop at creating ultralight and strong structural components.
HRL has extended the microlattice’s energy absorption to football helmets, where it dissipates energy in collisions. This offers progress on the issue of concussions, which have been threatening the game of football as people have become increasingly wary of the dangers of chronic traumatic encephalopathy (CTE). Enough? No, the uses of these light-based structures have been further squeezed for even more applications. J. Bauer’s study of glassy carbon nanolattices demonstrated that pyrolysis can convert these structures into nanolattices that approach strengths that haven’t been seen before. Using light-based methods to create these wonderful, little structures opens up the unimaginable ability to influence a structure’s architecture and nature for specific and tailored purposes. Creation through light will soon influence the development of aircraft, football helmets, and shoes, with the possibilities open to much, much more. It is just beginning. We will look aghast, amazed, confused, and a little overjoyed at the transformations caused by light-based manufacturing processes. Through it all, we will let our inner cavemen out, and be proud of it as we gaze at the true magnificence of light.

Hari Nanthakumar is a freshman in SEAS hoping to study materials science & engineering. A staff journalist for CSR, he enjoys spending his time watching random Netflix shows and occasionally venturing out into NYC.

By Naviya Makhija

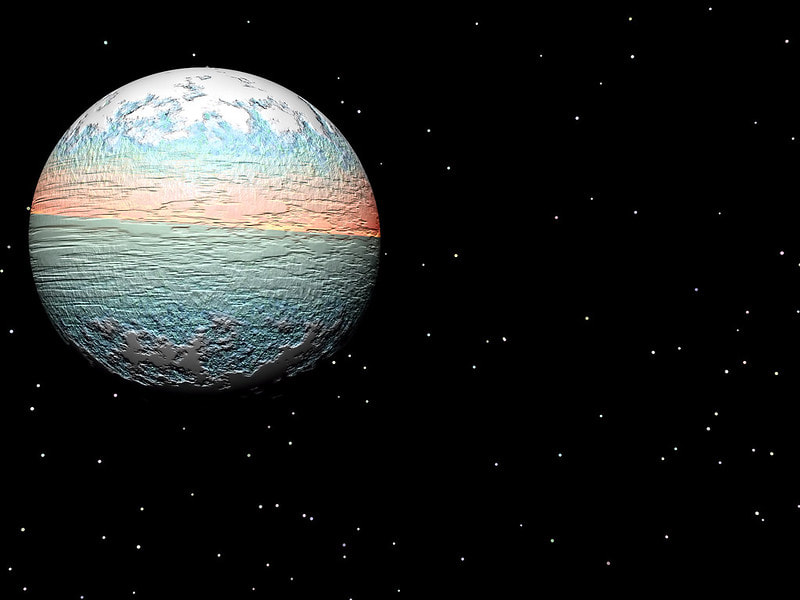

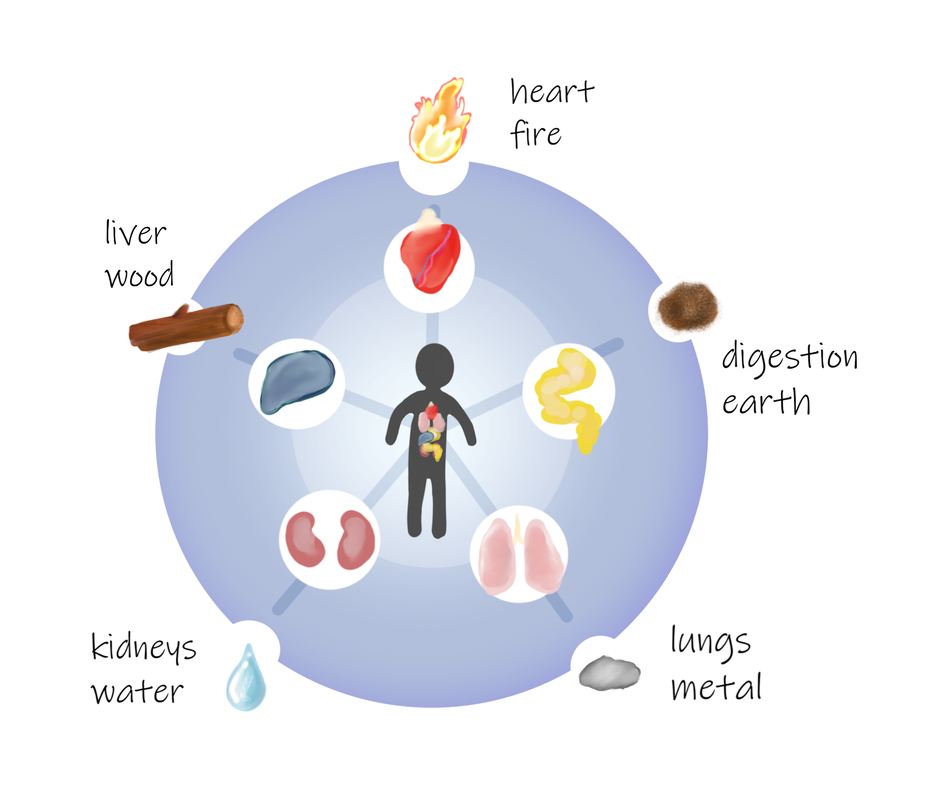

While the Internet remains fixated on Pluto’s status as our solar system’s ninth planetary member, another may soon contend for the position. Initially named Planet X, ‘Planet 9’ is a theoretical planet that many believe to exist beyond the Kuiper belt. The belt, which surrounds Neptune’s orbit, is a conglomeration of icy structures that include asteroids, comets, and dwarf planets such as Pluto. The existence of Planet 9 was first hypothesized in 2014 and inspired by the discoveries of Sedna and 2012 VP113, both of which are dwarf planets also in the Kuiper belt. Not only did studies of their orbits not conform to the expected trajectories derived from the current model of the solar system, but they also posed questions that could not be answered by our current hypothesis of planetary development. The two orbit in an “intermediate” space between the Sun and the Oort cloud – a shell of icy objects that exists past the Kuiper belt – often referred to as the inner Oort cloud. This location is seemingly inexplicable, as it’s too far from the planets in our solar system for them to be the primary influencers and too close to the Sun for it to be attributed to the pull of the Oort objects. One possible explanation is the existence of Planet 9 near Sedna’s perihelion, which is the point of Sedna’s orbit that is closest to the Sun. The Kuiper belt itself is evidence that Planet 9 exists. Some simulations have shown that one possibility for Planet 9 is that it once existed between Uranus and Neptune. As the planets moved outward, the theoretical planet’s gravity trapped the bodies in the Kuiper belt causing them to coalesce into the ‘groove-like’ structure that now exists. The 2015 discovery of a third dwarf planet, nicknamed ‘The Goblin,’ strongly corroborated the previous discoveries of Sedna and 2012 VP113, and it also points toward the existence of a theoretical planet such as Planet 9. 
In fact, The Goblin is the most promising of the fourteen similar dwarf planets discovered thus far, as its large orbit allows us to better single out and study its influencing factors. The Goblin’s orbit is seemingly unaffected by any of the four planets residing in the outer Solar System (Jupiter, Saturn, Uranus, and Neptune) because it doesn’t pass close enough to any of them. Its isolated path implies that there must be something else, perhaps a large body such as Planet 9, that is influencing it. The orbits of these three dwarf planets are similar in longitude of perihelion, which describes the direction in which their respective elliptical orbits are elongated. Their longitudes of perihelion are consistent with the predicted gravitational force that a body such as Planet 9 would exert. Computer simulations modeling the birth and growth of our solar system are now being conducted to substantiate these claims, and many of them are helping to uncover a surprising history. Of the hundreds of simulations run without Planet 9, only one percent yielded a model of the solar system that is somewhat similar to what we observe today. These results indicate a strong possibility that elements that currently exist or have existed elude us and have influenced the formation of our solar system without our knowledge. The other 99 percent of trials show key discrepancies with our known solar system, some as large as the absence of essential players such as Uranus or Neptune. In those simulations, the planets were expelled from the solar system by Jupiter’s gravitational field. Given that Uranus and Neptune definitely exist, new research is attempting to account for potential bodies, such as Planet 9, that could change our current understanding of solar system evolution. Trials that included such a body yielded results that conformed more closely to the system observed today.
From this and similar statistical analyses, it’s estimated that the speculated planet has ten times the mass of Earth and an orbit between 500 and 1,000 AU, which suggests that a year on Planet 9 could be almost 20,000 times as long as our own. We might be tempted to believe that, given its size, it would be easy to detect Planet 9. However, its aphelion, which is the farthest point of a planet’s orbit from the Sun, lies far beyond the reach of our current methods of detection. As a planet gets farther from the Sun, its brightness decreases according to the inverse-square law, making it difficult to observe. This leads many to be skeptical of the presence of Planet 9. They claim that conclusions are being drawn from a small sample, and they highlight the possibility that the altered orbits of 2012 VP113, Sedna, and 2015 TG387 (The Goblin), or even the Kuiper belt’s unique structure, are simply a result of the cumulative gravity of the smaller objects surrounding them. Nevertheless, evidence supporting the existence of Planet 9 is on the rise, and perhaps soon we might see Pluto stripped of its status as the honorary ninth member of our solar system.

The Concourse of Traditional Chinese Medicine and Western Medicine

By Sirena Khanna

Traditional Chinese Medicine (TCM) is an ancient method of healing founded upon the idea of Qi. Qi, written as 气 and pronounced like the “chi” in “chia seeds,” means energy in Chinese. In English, the word “energy” derives from words associated with force and vigor, but anyone who has taken general chemistry or physics knows that the concept of energy has much deeper underpinnings in nature itself. Energy simultaneously builds and destroys the universe; from the microscopic interactions between atoms in a water molecule to the giant nuclear explosions that rocked the end of World War II, energy determines the order of life, for good or bad. In Chinese, the word for energy captures its fundamental role in everyday life.
The character Qi (气) appears in the words for “gas” (气体), “air” (空气), “odor” (气味), and “weather” (天气), among many others. The character 气 is written using the “air/gas” radical, so the idea of a disseminating energy is embedded in the way it is written, too. The deeply rooted and rich connotations of Qi elevate the idea of energy in the Chinese language to something more accurately translated as vital energy or life energy. Much like the matter-energy construct in physics, Qi in Chinese medicine explains what binds the universe together. Qi connects every dimension of life: physical, mental, and spiritual; as such, TCM practices focus on promoting and maintaining the flow of Qi in a person’s body, mind, and spirit. Practicing TCM relies on an understanding of Qi, and since there are many ways to qualify this energy, TCM practitioners need to be well-versed in recognizing its different aspects. The overarching classification system is known as yin and yang (阴阳); yin is associated with being cold, feminine, and light, while yang is associated with being hot, masculine, and heavy. Good health, according to yin and yang, is a balance between these complementary forces. While yin and yang form the basis of TCM and Chinese philosophy, this overarching dynamic can be further broken down into the Theory of Five Elements, in which certain elements represent yin and others embody yang. This theory also attributes a Qi to each of the five vital organs; for example, there is a Liver-Qi, a Kidney-Qi, and a Heart-Qi. Each organ-Qi is associated with one of the five elements: wood, fire, earth, metal, and water. According to yin-yang and the Theory of Five Elements, illnesses are a direct result of an imbalance in or excess of Qi. For example, depression is most commonly associated with an imbalance in Liver-Qi. When a patient seems depressed, a TCM practitioner might address their Liver-Qi stagnation through acupuncture and/or a prescription of herbal remedies.
TCM texts, like Western Medicine textbooks, house a vast amount of information that helps the practitioner devise a proper treatment. Some of these texts are thousands of years old, such as the 2,200-year-old Huang Di Nei Jing. Modern TCM texts compile empirical evidence not only from ancient TCM texts like Huang Di Nei Jing but also from modern TCM practices. The Five Flavors Theory summarizes centuries of empirically determined herbal remedies. The theory classifies each herb by one of five flavors and by a temperature, either cold or hot. The five tastes (sweet, salty, sour, bitter, and acrid, or spicy) each correspond to an element in the Theory of Five Elements. Imbalances in Liver-Qi, for example, are treated with sour-tasting and cooling herbs because these herbs have relaxing and fluid-retaining properties. TCM posits that plants such as Aconitum (commonly known as aconite or monkshood) meet these criteria, so Aconitum allegedly nourishes the liver and helps blood circulation. Altogether, yin and yang and the Theory of Five Elements are used to classify the different qualities of Qi, and in doing so, these systems form the cornerstones of diagnosing and treating illnesses within TCM. To those brought up with Western Medicine (WM), the TCM system of diagnosis and treatment seems radically different. Is taste really a good indicator of medicinal effect? Does the TCM approach to Qi miss the mark completely? The relatively recent emphasis on evidence-based research has spurred studies into the efficacy of TCM. Although much of this research is in its infancy, the preliminary results are beginning to show why the thousands-of-years-old system of TCM works, at levels ranging from the physiological to the molecular. To investigate Liver-Qi stagnation, researchers at the Beijing University of Chinese Medicine created a rat model of depression to study the biological basis of Liver-Qi dysfunction.
In the study, the researchers identified three genes in the liver associated with depression; they also found that treatment with the Chinese herbal remedy Si Ni Tang (which contains Aconitum) helped improve depression-related behaviors in the rats. The specific mechanisms that alter the expression of these depression genes in the liver were not revealed, but, at the very least, this study supports a relationship between liver dysfunction and depression. Another common area of interest is the role of Chinese herbs in treating diseases like diabetes and diseases of the nervous system, such as neuropathy. Neuropathy is a broad category of disorders caused by the degeneration of the nervous system. Peripheral neuropathy (PN), in particular, is a condition that results from damage to the peripheral nervous system. PN is a common complication of diabetes mellitus (DM). Between 60 and 70 percent of patients with diabetes also have neuropathy, a condition specifically referred to as diabetic peripheral neuropathy (DPN). In America, around 20 million people have DPN, and although the prevalence of DPN has not been measured in China, around 114 million people there have DM. TCM uses both acupuncture and herbal remedies to treat PN disorders such as DPN. In 2012, researchers at the Peking Union Medical College Hospital reported support for the use of Astragalus, Salvia, and yam in such treatment. They examined the effects of these herbs on Schwann cell myelination and neurotrophic factors, which are important players in maintaining and regenerating neurons. Although the exact mechanisms by which Astragalus, Salvia, and yam act on the nervous system are unknown, the study nonetheless indicated that these Chinese medicines promote nerve repair and regeneration. Slowly but surely, evidence-based research is shedding light on the scientific basis of what has been known through trial and error in TCM for thousands of years.
A promising example of the concourse of TCM and WM appeared in a 2017 study on the use of acupuncture in hospital emergency rooms. The study, published in the Medical Journal of Australia, showed that acupuncture is an effective treatment for acute pain from ankle sprains and lower back pain. Its analgesic effects took around an hour to manifest, but once they fully set in, the pain relief was comparable with that of pharmacotherapy. Although research into TCM is just beginning, the modernization of TCM is well underway. In the US, the National Center for Complementary and Alternative Medicine (now known as the National Center for Complementary and Integrative Health, or NCCIH) was established in 1998 “to define, through rigorous scientific investigation, the usefulness and safety of complementary and alternative medicine interventions and their roles in improving health and health care.” The center has a budget of around $120 million, an investment that speaks to the United States’ interest in pursuing integrative medicine. On the international stage, in 2008, the World Health Organization (WHO) supported an international agreement called the Beijing Declaration that promotes the preservation of traditional medicine in national healthcare systems across the world. This declaration is representative of the global impetus towards integrative medicine. In 2016, China further propelled the shift toward evidence-based research into TCM by passing new legislation that expanded its funding.
As Article 8 of the Law of the People's Republic of China on Traditional Chinese Medicine upholds, “The state supports TCM scientific research and technical development, encourages innovation in TCM science and technologies, shall popularize and apply TCM scientific and technological achievements, protect TCM intellectual property rights, and enhance TCM scientific and technical level.” Overall, the legislative push towards researching TCM in a scientific setting bodes well for its integration into WM. In a yin and yang fashion, TCM and WM balance each other’s extremes. The use of randomized clinical trials and biomedical research can bring TCM up to speed with WM modern standards, while TCM can teach WM the overarching, holistic approach of traditional medicine. An important application of this mutualistic relationship between TCM and WM is in pharmaceuticals. Pharmaceutical research often focuses on a reductionist method by singling out compounds from herbs to sell as drugs. However, the one-drug-one-disease philosophy is questionable. Current research suggests that herbal medicine, including those from TCM, has synergistic effects, so individual active ingredients work together to create a greater effect and should not be isolated and prescribed on their own. TCM can complement the rigid, sometimes narrow-minded views in WM with a fluid, personalized treatment. For example, a Western doctor might prescribe vitamin supplements to someone with neuropathy if the doctor thinks the disease is caused by a vitamin deficiency. In comparison, TCM practitioners treat the entire body with multiple herbal remedies in order to balance the flow of Qi. TCM’s holistic approach to disease explains why acupuncture is also a big part of treatment. Disease is a complex interaction of many biological systems, so the entire person— not just one type of molecule— should be treated.

The best of both worlds would be a combination of WM and TCM. Integrative medicine could yield the best diagnoses since it combines TCM pattern classifications with biomedical diagnoses. Many patients, however, turn to TCM clinics only when WM has no cure to offer, making TCM the next best option. Treating TCM as a last resort reveals the myopic attitude many people in the United States have toward alternative medicine. Yet TCM might be able to treat certain diseases better than WM, and unfortunately patients only discover its virtue after going through everything WM has to offer. Moreover, TCM is rooted in preventative, non-invasive medicine, so the goal is to maintain balance in Qi before major diseases ensue. In this regard, TCM is not only more cost effective but also better for the patient’s health because it potentially prevents disease altogether. The main deterrent to TCM integration in WM is the lack of awareness and the stigma against Chinese medicine. Most insurance companies do not offer coverage for TCM acupuncture and herbal remedies due to safety and liability concerns; these concerns are mostly unfounded because TCM practitioners must undergo rigorous training and obtain a formal license to practice medicine, just as WM doctors do. But as research continues to support TCM practices and as patients turn to alternative medicine, demand could carve out a new space for TCM in the Western healthcare market. As it turns out, through alternative medicine, WM and TCM have the potential to complement each other and strike a balance within the healthcare system, which at the moment could use a bit more yin and less yang.

By Manasi Sharma

Intelligent, astute, and, in her own words, “greedy for life experiences,” Dr. Janna Levin is one of the most engaging and well-known scientists on campus. A Professor of Physics and Astronomy at Barnard College and a recipient of the Tow Professor grant, Dr. Levin focuses her research on the early universe, chaos, and black holes. She is also the author of popular science books such as How the Universe Got Its Spots: Diary of a Finite Time in a Finite Space (2002), A Madman Dreams of Turing Machines (2006), and most recently, Black Hole Blues and Other Songs from Outer Space (2016). Dr. Levin has also appeared on many shows, including this past January as a host of Nova's award-winning episode “Black Hole Apocalypse.” Many of us on campus might also know her as a lecturer for the Astrophysics unit in Columbia College’s Frontiers of Science seminar. Dr. Levin sat down to chat with me earlier this year about topics ranging from her past work on universe topology to her scientific pursuits outside of research. In short, she says, “I’m particularly interested in general relativity—the suggestion that space and time warp and stretch in response to mass and energy. Everything I work on has to do with that: the Big Bang, the shape of space and time, black holes.” When I asked her about what she has been working on most recently, Dr. Levin excitedly introduced her favorite topic: black holes. “As of late, I’ve been more focused on black holes and part of that is because of this incredible discovery that won the Nobel Prize in 2017.” (She is referring, of course, to the monumental discovery of gravitational waves, announced in February 2016, which were produced by the merger of two black holes more than a billion years ago.) “It’s weird to think about it,” she tells me, “but black holes are like the fundamental particle of gravity, almost like electrons. They are the wildest, most extreme objects in the universe.”
Dr. Levin has done extensive work on the dynamics of black hole mergers and whether or not electromagnetic radiation is released during the mergers. The discovery of the neutron-star merger in 2017 really kickstarted Dr. Levin’s work on black holes, as such observations provide experimental counterparts to the theory she works on. “It’s always good to work on something and then have it be observed in some manner,” she told me. Professor Levin’s work also includes analysis of extra-spatial dimensions, which lie outside the normal three dimensions of length, width, and height. According to Dr. Levin, “extra-spatial dimensions might be topologically finite—you could imagine them being wrapped up like origami. In this case, all the dimensions in the universe should be finite. The question then arises of how the regular three got to be so big.” She went on to say that studying these smaller dimensions could give you an idea of whether the universe is finite or infinite. “It’s a question that might be unanswerable, and that’s one of the reasons that I wrote [How the Universe Got Its Spots] because I wasn’t sure if anyone could ever figure it out. I wanted to keep the idea alive.” When prompted about any future plans or collaborations, Dr. Levin told me that she teams up with other researchers from around the world. “I have collaborators in Georgia, Harvard, Oxford, et cetera, although admittedly, it’s hard to collaborate over long-distance,” she told me with a laugh. She also mentioned her current and future work with Columbia Professor Brian Greene, with whom she has done some “really good work on extra-spatial dimensions—specifically, how to combine string theory and cosmology.” With regard to future plans, she mentioned that she sits down every few years to reflect on what she has done so far and where she wants to go in the coming years. I was curious to know what her daily schedule looked like.
She told me that she divides her time between these different pursuits and that she doesn’t have a set pattern because her time is largely dependent on her academic schedule. However, she is a strong proponent of dividing tasks and committing to things in chunks. She tells me, “I can’t fit everything in in one day, so I try to assign days to certain tasks—I have my writing days, my Barnard/Columbia days, and so on, and in those days, I’m absolutely dedicated to those individual tasks… I’ve noticed that that tends to work best.” In addition to her research, Professor Levin is currently teaching Barnard’s introductory physics sequence, as well as working on an upcoming book. She is also the Director of Sciences at Pioneer Works, a non-profit foundation that seeks to foster multidisciplinary creativity in the arts and sciences. Between her mind-boggling research, best-selling books, and on-campus lectures, Professor Levin’s status as an accessible campus figure belies the true breadth of her accomplishments. At the same time that she explains the basic tenets of general relativity to a room of tired first-years, she studies the multi-dimensional universe and the enigma of its black holes. Professor Levin is proof that brilliance and approachability are not mutually exclusive, and that the beauty of science lies in its appeal to every level of expertise, from bleary-eyed high school graduates to award-winning physics geniuses.
