
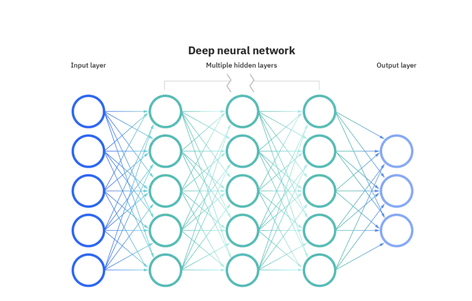

By Aidan Eichman

Sarah Connor pulls back the Terminator’s scalp to unveil the cylindrical metal contraption inside its head. She and her son, John, look for ways to disconnect the robot from its owner’s control. After diving deep into the Terminator’s skull, Sarah removes its central processing unit, immediately placing the Terminator in a fixed state in which it is unable to talk or move. In essence, the robot has been turned off via a metaphorical on-off switch. The audience must then ask whether this intelligent system in the form of a human body is, like a human, sleeping, or whether it is, as a robot, void of any capacity to sleep. The inert Terminator looks identical to a sleeping human, causing viewers to question the binary separation of robotic functionality and human existence.

The connection between other intelligent beings and humans may be closer than one might imagine. MIT researchers found that animals can dream in a way that involves “replaying events or at least components of events that occurred while…awake,” highlighting a surface-level similarity between the neurological processes of humans and animals. The focus of this study is the role of neurotransmitters in neuron activity throughout the brain. Neurons in the suprachiasmatic nucleus, which help maintain biological clocks and sleep-wake homeostasis, play a fundamental role in the creation and interpretation of dreams for both humans and animals. Without an on-off switch, species with a central nervous system rather than a central processing unit must rely on neurotransmitters and, in turn, neurons to trigger the need for sleep. Hence, the similarities between human and animal sleep become apparent through the functions of neurons.
Alongside the function of neurons lies neuroplasticity, or the “capacity of neurons and neural networks in the brain to change their connections and behaviour in response to new information, sensory stimulation, development, damage, or dysfunction.” This process is one of the most pivotal functions, if not the most pivotal, of intelligent beings’ brains in learning and memory formation. Ana Sandoiu details how neuroplasticity drops when humans are in REM sleep so that material learned while awake can be solidified. Hence, the connection between REM sleep and neuroplasticity is crucial to note while exploring sleep processes.

But how can one apply these neurological concepts to artificial intelligence (AI) systems, which lack any form of central nervous system? Even though intelligent systems might not contain neurons, an inherent part of human and animal existence, the anatomy of AI is strikingly similar to human processes by virtue of the neural networks in deep learning algorithms. Deep learning, a new paradigm for AI, is a way in which researchers are attempting to simulate the human brain’s behavior through data manipulation. Put simply, though it is difficult to put simply, deep learning is the process of using data and abstractions to teach AI how to learn like a human.

For example, think about a child learning about different sports. If you wanted to teach the child about baseball, you could place two photos in front of the child: one of a baseball scene and another of a different sport. Once the child guesses, you would tell the child whether or not they guessed correctly. After many trials, the child would begin to pick up on the idiosyncrasies of baseball scenes: bats, baseballs, mitts, etc. This concept of repetitive training and correction builds a hierarchy of information that deep learning algorithms within AI can use to power interfaces like Siri and Alexa.
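
The guess-and-correct loop in the baseball example can be sketched in a few lines of Python. This is only a toy illustration, not how Siri or Alexa are built: it uses a single-unit perceptron rather than a deep network, and it assumes each “photo” has already been reduced to three made-up features (`has_bat`, `has_mitt`, `has_net`). All names and data here are hypothetical.

```python
# Hypothetical toy data: each "photo" is reduced to three hand-made features
# (has_bat, has_mitt, has_net). Label 1 = baseball scene, 0 = another sport.
EXAMPLES = [
    ((1, 1, 0), 1),
    ((1, 0, 0), 1),
    ((0, 1, 0), 1),
    ((0, 0, 1), 0),
    ((0, 0, 0), 0),
]


def guess(weights, bias, features):
    """Return 1 ("baseball") if the weighted evidence is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=1.0):
    """Perceptron rule: after each wrong guess, nudge the weights toward the answer."""
    weights, bias = [0.0, 0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in examples:
            # error is 0 when the guess was right, +1 or -1 when it was wrong
            error = label - guess(weights, bias, features)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(EXAMPLES)
predictions = [guess(weights, bias, features) for features, _ in EXAMPLES]
```

As with the child, nothing changes after a correct guess; only mistakes move the weights, and repeated passes over the examples are what let the pattern settle.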
However, how can an intelligent system learn if it does not contain neurons or a functional human brain? Neural networks are computing systems composed of node layers that mimic the biological neurons present in humans and animals. They allow modern AI systems to perform the tasks necessary for deep learning. As seen in the image above from IBM, “each node, or artificial neuron, connects to another and has an associated weight and threshold.” Each layer receives data as input and produces output; when a node’s output passes its threshold, it activates the next artificial neuron in the network. The recognition of a baseball scene described previously could be refined over time by training these neural networks on data. Hence, the artificial neurons in these networks are capable of a form of AI neuroplasticity, as seen through their training and the network schema above. The anatomical resemblance of AI neural networks to human and animal brains raises a question: if AI systems “sleep” in the same way that many organic lifeforms do, just how similar are human brains and an AI system’s neural networks?
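
The weight-and-threshold description of an artificial neuron quoted above can be made concrete with a small sketch. The weights and thresholds below are hand-picked, hypothetical values chosen only to show one layer’s activations feeding the next; a real network would learn them from data.

```python
def neuron_fires(inputs, weights, threshold):
    """An artificial neuron: fire (1) only if the weighted input exceeds the threshold."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0


def layer_output(inputs, layer):
    """Feed the same inputs to every neuron in a layer and collect their outputs."""
    return [neuron_fires(inputs, weights, threshold) for weights, threshold in layer]


# Hypothetical hand-picked (weights, threshold) pairs, for illustration only.
HIDDEN_LAYER = [
    ([1.0, 1.0], 0.5),   # fires if at least one input is on
    ([1.0, 1.0], 1.5),   # fires only if both inputs are on
]
OUTPUT_LAYER = [
    ([1.0, -1.0], 0.5),  # fires if the first hidden neuron fired but not the second
]


def network(inputs):
    """Pass inputs through the hidden layer, then through the output layer."""
    hidden = layer_output(inputs, HIDDEN_LAYER)
    return layer_output(hidden, OUTPUT_LAYER)[0]


results = {pair: network(list(pair)) for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]}
```

With these particular values the two-layer network fires only when exactly one of its two inputs is on, a pattern that no single threshold neuron can produce on its own, which is one reason layers matter.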

Watkins et al. at the Los Alamos National Laboratory (LANL) are exploring this question through their analysis of spiking neural networks (SNNs), a subset of artificial neural networks. The premise of SNNs is to directly generate “computational models that mimic biological neural networks…[and] increase both algorithmic and computational complexity”. Primarily, this focus suggests creating a biological replication of humans that increasingly surpasses human intelligence. LANL uses unsupervised dictionary learning in intelligent systems containing SNNs as a foundation for its investigation. As “unsupervised” suggests, the researchers allow their intelligent systems to learn independently, without labeled guidance; dictionary learning, in turn, refers to methods in which a system processes large, dense datasets and learns a set of basic elements from which the data can be compactly represented, so that it can make the best use of the data provided.

Unfortunately, these intelligent systems’ attempts at large computational complexity tend to cause their SNNs to “leak” from compromised artificial structures and, in turn, lose learning capacity. As a last-resort remedy, Watkins et al. succeeded in using Gaussian noise (slow-wave noise) to cover the metaphorical leaks caused by pure noise, thereby restoring the SNNs’ dynamical stability. Exposure to Gaussian noise acts as a means through which SNNs are restored to their proper, sealed condition; this restoration mimics one aspect of human and animal functional restoration through sleep. In their conclusion, the LANL team remarks that “sinusoidally-modulated noise [such as Gaussian noise…] is analogous to slow-wave sleep,” a phase of human and animal NREM (non-rapid eye movement) sleep in which memory consolidation primarily occurs. By this analogy, NREM sleep is to humans and animals as Gaussian noise is to these AI systems.
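
To give a feel for what “sinusoidally-modulated noise” might look like as a signal, here is a minimal sketch using only Python’s standard library. It is not the LANL team’s code or model: the function name, the envelope shape, and every parameter value are assumptions made for illustration, and how such noise is actually fed into an SNN is not shown.

```python
import math
import random


def sinusoidally_modulated_noise(num_steps, period, max_sigma=1.0, seed=0):
    """Gaussian noise whose standard deviation rises and falls along a sine wave."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    samples = []
    for t in range(num_steps):
        # Envelope oscillates between 0 and max_sigma once every `period` steps.
        envelope = max_sigma * 0.5 * (1.0 + math.sin(2.0 * math.pi * t / period))
        samples.append(rng.gauss(0.0, envelope))
    return samples


# Hypothetical settings: 200 time steps, one slow "wave" every 50 steps.
noise = sinusoidally_modulated_noise(num_steps=200, period=50)
```

The slow rise and fall of the noise amplitude is the part that loosely echoes the slow waves of NREM sleep; the individual samples themselves remain random.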
We have thus arrived at a preliminary similarity between humans, animals, and AI systems regarding sleep and its benefits for each intelligent being. However, one must bear in mind that attempting to formulate rigid conclusions in this research field, which relies on abstractions as its basis, would be reductive. Undoubtedly, there exists an ambiguity in AI sleeping capabilities. The inexactness of sleep as a realm of neuroscience research limits the application of firm conclusions about sleep to AI systems like the Terminator. So, as the cyborg sits in its chair, we can make a tentative assumption that it is sleeping by virtue of its artificial neural networks, but much more research on SNNs in AI systems is needed to secure a stance that is grounded in practice rather than theory.

