
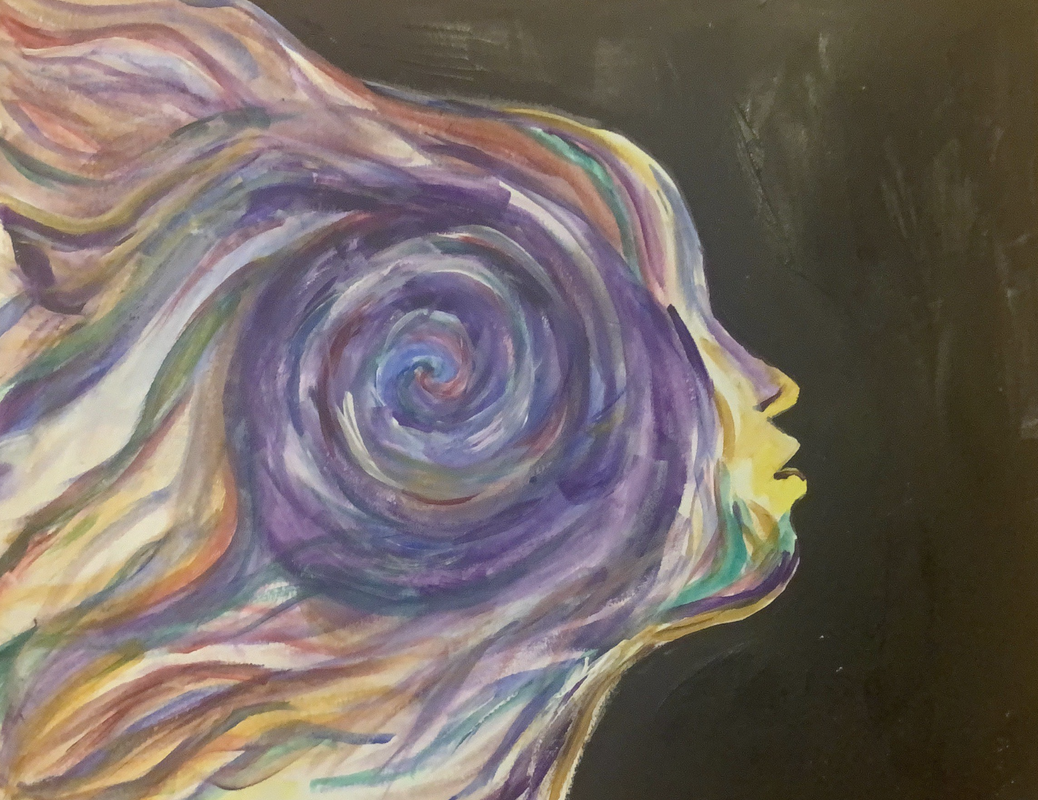

By Kevin Wang

What is consciousness? Philosophers have tackled this question for hundreds of years, yet have always fallen short of reaching an objective conclusion. With simple technology and a limited understanding of the human brain, they had trouble grasping the workings of our minds. Many thought that our selves and our consciousness were ethereal, stemming from a magical dimension rather than rooted in a physiological source.

However, as technology has developed, neuroscientists have built a better understanding of how the human brain works. With this research has come a better understanding of the mind and an ability to tackle consciousness from a purely biological perspective. Many theories of consciousness have been proposed, one of which is Integrated Information Theory, or IIT.

IIT is an attempt to build a theory of consciousness from the top down, rather than the bottom up. In other words, rather than philosophically theorizing about what consciousness is, it looks at consciousness in humans and attempts to create a list of qualities that define a conscious being.

IIT begins by listing a series of axioms, or basic requirements for consciousness. These axioms are numerous and complex, but let’s begin by discussing two of the major ones.

The first axiom is intrinsic existence: consciousness exists, and your experiences are real. This seems blatantly obvious and doesn’t seem to prove anything special. But the axiom actually highlights an important fact about consciousness: we can be certain that we are conscious. This harkens back to the thinking of philosopher René Descartes, who coined the phrase “I think, therefore I am.” Descartes posited that we can question nearly any fact about the world: the sky is blue, the year is 2020, 2 + 2 = 4. This is because our senses may lie to us, and we may make errors in logic. However, the one thing we cannot doubt is that we are thinking, for to doubt it would itself be an act of thinking.
Thus, when we consider consciousness and experience, we cannot deny that it exists, for without consciousness we could not consider it in the first place.

The second relevant axiom is that of integration: our experience cannot be partitioned, or separated into different parts. For example, imagine looking at a white cue ball. The experience of seeing a white cue ball is not the sum of the experience of seeing white and the experience of seeing a cue ball. In fact, it’s unclear what it would even mean to see a cue ball without any color. Rather, experience is integrated: any given experience is simply that, an experience, and cannot be divided into parts.

From these axioms, the theory develops certain postulates, which are more complex results derived from the axioms. One major postulate is that of cause-effect relationships in an integrated system. The different parts of our consciousness do not occur separately, but rather have causal power over each other: the different “parts” directly affect one another. As a result, we do not have multiple senses that are added up, but rather one unified experience.

This is best explained by taking the example of the human body. At first, it does not appear to be truly integrated. We have different organs, such as our eyes, ears, mouths, and noses, all of which process different senses separately. What you hear does not seem to have an effect on what you see. However, this hypothesis falls apart once we take a closer look at the brain, specifically the cerebral cortex, where sensory information is processed. Information from sensory organs arrives here in the form of signals from neurons. These signals, though, are not processed separately. While there are different parts of the cerebral cortex for different senses (for example, the visual cortex and the auditory cortex), they do not operate in isolation. Rather, each sense has a direct effect on the others: a cause-effect relationship.
The exact mechanism by which this occurs is not yet certain, but it can be verified empirically: the summation of the senses has been measured to be non-linear. In other words, the information from each sense is not simply added together; certain parts are exaggerated or diminished depending on the mix of signals that come in. Therefore, what we perceive is created by an integration of our senses, not simply by adding them all together.

The developers of IIT have even proposed a way to quantify the amount of “consciousness” in a system with a non-negative value called Φ (phi), where 0 means no integration at all. The calculations for this value are complex, but they essentially compare the system as a whole with the same system taken apart. If, when taken apart, a system functions just as well as it did when put together, then it isn’t very integrated, and Φ will be low. However, if the parts have cause-effect power over each other, then the system will work very differently when taken apart, leading to a high Φ value.

This theory is revolutionary, not only for providing a biologically grounded theory of consciousness, but also because of its implications for AI. If consciousness can be quantitatively measured, it seems likely that it could be constructed in machines. We could create machines that are increasingly advanced and have higher and higher levels of consciousness. In fact, there is no reason why the human experience would be the pinnacle of consciousness. If consciousness is a sliding scale, it appears that we could create a machine that somehow experiences a higher level of reality than we do. It is hard to picture what this looks like or even means, but the implications of IIT are impressive. We may soon be navigating a future where machines demonstrate human-like consciousness. In fact, they might even be beginning to do so.
Most importantly, though, this theory suggests that our own consciousness is not a magical construct, but rather the product of the complex inner workings of our brains.
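
To make the whole-versus-parts idea behind Φ a little more concrete, here is a minimal Python sketch. It is not the actual Φ calculation from IIT, which is far more involved. As a stand-in, it uses mutual information between two halves of a tiny two-unit system: how much information the whole carries beyond what the two halves carry on their own. The function names (`entropy`, `marginal`, `toy_integration`) and both example systems are assumptions made up for illustration.

```python
import math
from itertools import product


def entropy(dist):
    """Shannon entropy, in bits, of a dict mapping outcomes to probabilities."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def marginal(joint, index):
    """Distribution of one unit alone, taken from a joint distribution over pairs."""
    out = {}
    for state, p in joint.items():
        out[state[index]] = out.get(state[index], 0.0) + p
    return out


def toy_integration(joint):
    """Toy proxy for integration (NOT IIT's phi): H(A) + H(B) - H(A, B)."""
    return entropy(marginal(joint, 0)) + entropy(marginal(joint, 1)) - entropy(joint)


# Hypothetical system 1: two independent coin flips.
# Taking it apart loses nothing, so the score is zero.
independent = {(a, b): 0.25 for a, b in product((0, 1), repeat=2)}

# Hypothetical system 2: two perfectly coupled units.
# Each half alone misses what the whole system "knows".
coupled = {(0, 0): 0.5, (1, 1): 0.5}

print(toy_integration(independent))  # 0.0
print(toy_integration(coupled))      # 1.0
```

The independent system scores 0 bits because it behaves the same whether it is whole or split, while the coupled system scores 1 bit because its two halves constrain each other. That mirrors, in a very loose way, the low-Φ and high-Φ cases described above.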

1 Comment

Grant Castillou

10/26/2021 11:22:45 am

It's becoming clear that with all the brain and consciousness theories out there, the proof will be in the pudding. By this I mean, can any particular theory be used to create a human adult level conscious machine? My bet is on the late Gerald Edelman's Extended Theory of Neuronal Group Selection. The lead group in robotics based on this theory is the Neurorobotics Lab at UC Irvine. Dr. Edelman distinguished between primary consciousness, which came first in evolution and which humans share with other conscious animals, and higher order consciousness, which came only to humans with the acquisition of language. A machine with primary consciousness will probably have to come first.
