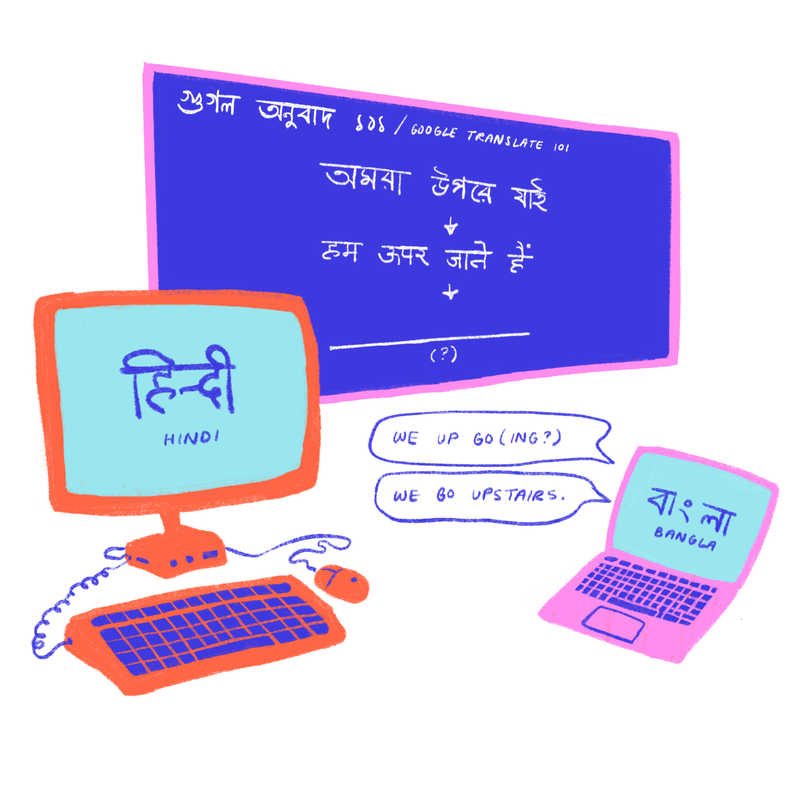

Illustrated by Sreoshi Sarkar

By Eleanor Lin

There are over 7,000 languages spoken around the world, each one unique in the ways it encodes meaning. Translation is thus a challenging task for humans and machines alike. How do you find the right words and grammatical constructs to accurately transfer the sense of a message from the original language to a different one?

Neural machine translation (NMT) takes a very different approach to this problem than a human translator would. NMT relies on showing a machine learning algorithm millions of examples of human-generated translations from one language to another, in order to teach it to translate on its own. For example, to train an algorithm to translate from English to German, the sentence "I read a book," paired with a translation written by a human translator fluent in both languages (e.g., "Ich lese ein Buch"), might appear alongside millions of other example sentence pairs.

However, this becomes a problem for low-resource languages: languages that do not have large amounts of data available to use as training examples for NMT systems. English is a prime example of a high-resource language. It is the dominant language of the internet, it is translated to and from countless other languages, and it accounted for 56% of all web content in 2014. Meanwhile, with over 7,000 languages worldwide but only an estimated 165 used online, there are bound to be thousands of low-resource languages for which high-quality translation training data are sparse or even non-existent. Without sufficient data to learn from, NMT produces subpar translations to and from low-resource languages.

To overcome this obstacle, researchers at the University of Southern California created a transfer learning method in 2016 to improve NMT performance in low-resource languages.
The main idea is that by first teaching a "parent" translation model to translate between two high-resource languages, a "child" model will be better able to learn how to translate between a paired high-resource and low-resource language. By analogy, a person who has learned French might find it easier to learn one of the other Romance languages, since they are related languages and share similarities in vocabulary and grammar. Similarly, the researchers found that when they transferred some of the information learned by a parent model to a child model, the child produced better translations than NMT models trained without transfer learning. The boost in performance was even more pronounced when the high-resource and low-resource languages used in the parent and child models, respectively, were more similar to one another.

There remains plenty of room for improvement in machine translation across the board, as indicated by the simple fact that human translations are still the gold standard by which machine translations are judged. Nevertheless, transfer learning has the potential to extend the power of neural machine translation to more languages around the world, allowing low-resource language communities to carve out their own digital spaces alongside dominant languages on the global stage. After all, knowledge, and therefore language, is power.
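For readers who like to see ideas in code, the parent-and-child setup described above can be sketched in a few lines of Python. This is a toy illustration only, not the researchers' actual method. The parameter group names, the `init_params` and `make_child` helpers, and the choice to re-initialize only the source-language embeddings are all assumptions made for the example, and random numbers stand in for weights that a real parent model would have learned from data.

```python
import copy
import random

def init_params(names, size, seed):
    """Create small random vectors that stand in for real NMT weights."""
    rng = random.Random(seed)
    return {name: [rng.uniform(-0.1, 0.1) for _ in range(size)] for name in names}

def make_child(parent_params, reinit_names, size, seed):
    """Start the child from a copy of the parent's weights,
    re-initializing only the named parameter groups."""
    child = copy.deepcopy(parent_params)
    child.update(init_params(reinit_names, size, seed))
    return child

# Hypothetical parameter groups for a toy translation model.
PARAM_NAMES = ["source_embeddings", "encoder", "decoder", "target_embeddings"]

# Parent: imagined to have been trained on a high-resource language pair.
# Here the values are just random placeholders.
parent = init_params(PARAM_NAMES, size=4, seed=0)

# Child: a low-resource source language paired with a high-resource target.
# Assumption: only the source embeddings start fresh, because the child's
# source vocabulary differs from the parent's.
child = make_child(parent, reinit_names=["source_embeddings"], size=4, seed=1)

# List which parameter groups the child inherited unchanged from the parent.
transferred = [name for name in PARAM_NAMES if child[name] == parent[name]]
print(transferred)
```

In a real system, the child would then continue training on the small amount of low-resource data available, starting from these inherited weights rather than from scratch.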

